NVMe MR-IOV - Lowering the TCO of IT Systems

How can the Falcon 5208 NVMe MR-IOV solution help organizations lower the total cost of ownership (TCO) of their IT systems?

We have previously discussed how the Falcon 5208 NVMe MR-IOV solution ensures SSD performance and flexibility; you can read those blogs here. The Falcon 5208 uses PCIe as its interconnect. Because the PCIe fabric is built in, it needs less hardware than NVMe-oF solutions to deliver high-performance storage service. An MR-IOV solution also makes better use of expensive CPUs, especially in virtualized environments.

Today, many storage services achieve high performance with SPDK (Storage Performance Development Kit). SPDK was introduced by Intel® to reduce software overhead in storage services, largely by running drivers in user space and polling devices instead of relying on interrupts. However, SPDK still spends CPU cycles on data movement, which means some CPU cores are dedicated to storage services instead of running actual applications. As SSD hardware advances, we can expect even more CPU cycles to be spent on I/O handling. Although single-core processing power is also improving, it is not keeping pace with storage devices, so any storage service built on SPDK will likely need more CPU cores to handle the I/O delivered by a single SSD in the future.

According to Intel®'s 2021 SPDK NVMe-oF RDMA Performance Report, 6 cores of an Intel Xeon Gold 6230 CPU were used to deliver 5.6 million IOPS (4K random read). In Intel's test, 16 SSDs were installed in the target system, which was connected to two identical initiators through Mellanox ConnectX-5 InfiniBand NICs, and the I/O tests were run from the initiators (see Intel® report). For today's enterprise and data center systems, the average cost per CPU core is approximately 300 USD (mid- to high-end CPUs). Based on Intel's report, the CPU cost of delivering 5.6 million IOPS is therefore approximately 6 × 300 = 1,800 USD. CPU requirements grow as we demand higher IOPS, and keeping up with higher-spec SSDs could raise this CPU cost by as much as 30% in the future.
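The cost arithmetic above can be written as a small helper. This is a minimal sketch: the core count and IOPS come from the Intel report as quoted in this post, while the 300 USD per-core cost and the 30% future increase are this post's own estimates, not measured values. The names `cpu_cost_usd` and `FUTURE_INCREASE` are hypothetical.

```python
# Figures quoted in this post from Intel's 2021 SPDK NVMe-oF RDMA report
SPDK_CORES = 6            # Xeon Gold 6230 cores used by the SPDK target
SPDK_IOPS = 5_600_000     # 4K random read IOPS delivered

# Assumptions stated in this post (estimates, not measurements)
COST_PER_CORE_USD = 300   # approximate cost of one mid- to high-end server core
FUTURE_INCREASE = 0.30    # possible future increase in CPU cost


def cpu_cost_usd(cores: int, cost_per_core: float = COST_PER_CORE_USD) -> float:
    """CPU cost of the cores dedicated to the storage service."""
    return cores * cost_per_core


today = cpu_cost_usd(SPDK_CORES)
future = today * (1 + FUTURE_INCREASE)
print(f"{SPDK_IOPS:,} IOPS on {SPDK_CORES} cores: ~{today:,.0f} USD of CPU")
print(f"With a {FUTURE_INCREASE:.0%} increase: ~{future:,.0f} USD")
```

Keeping the per-core cost as a parameter makes it easy to rerun the estimate with current CPU pricing.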

In contrast, an MR-IOV architecture provides storage service without dedicating any CPU cores to it. Under an MR-IOV architecture, regardless of how many physical hosts there are, every virtual machine can access the physical NVM resources directly through NVMe virtual functions. Each VM handles its own I/O and interrupts, so users can devote CPU cores to running more virtual machines or applications instead of storage services.
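As a point of reference for how virtual functions are created on a single Linux host, SR-IOV capable PCIe devices (including many NVMe SSDs) expose the standard `sriov_totalvfs` and `sriov_numvfs` sysfs attributes; writing a count to `sriov_numvfs` creates that many VFs, which can then be passed through to VMs. This is a minimal sketch assuming a Linux host and an SR-IOV capable SSD. It does not show how the Falcon 5208 assigns VFs across multiple hosts, which may work differently, and some SSDs also require controller resources to be assigned to each VF before it is usable. The helper name `enable_vfs` and any PCI address you pass to it are hypothetical.

```python
from pathlib import Path


def enable_vfs(bdf: str, num_vfs: int,
               sysfs_root: Path = Path("/sys/bus/pci/devices")) -> int:
    """Enable num_vfs SR-IOV virtual functions on the PCI device at bdf.

    Writes the Linux sysfs attribute sriov_numvfs (requires root on a real
    host) and returns the VF count read back afterwards.
    """
    dev = sysfs_root / bdf
    total = int((dev / "sriov_totalvfs").read_text().strip())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{bdf} supports at most {total} VFs, requested {num_vfs}")

    numvfs = dev / "sriov_numvfs"
    current = int(numvfs.read_text().strip())
    if current != num_vfs:
        # The kernel rejects changing one non-zero VF count to another directly,
        # so reset to 0 first.
        if current != 0:
            numvfs.write_text("0")
        numvfs.write_text(str(num_vfs))
    return int(numvfs.read_text().strip())
```

For example, `enable_vfs("0000:3b:00.0", 4)` (hypothetical address) would create four VFs on that device; the optional `sysfs_root` argument lets the function be exercised against a mock directory tree.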

A Falcon 5208 system can deliver 12M IOPS without dedicating any CPU cores to storage services. In comparison, reaching this number with SPDK may require roughly 12 CPU cores (extrapolating from Intel's result mentioned previously). At 300 USD per core, that is a CPU saving of approximately 3,600 USD. You may argue that PCIe Gen 4 or Gen 5 CPUs could bring this cost down, but as long as SPDK is used, the CPU cost of the storage service will never be zero.
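The 12-core figure comes from scaling Intel's result up to 12M IOPS. Below is a minimal sketch of that extrapolation, assuming IOPS grow linearly with core count (real SPDK scaling may not be linear) and the 300 USD per-core cost used in this post; `cores_for_iops` is a hypothetical helper. Strictly linear scaling of 5.6 million IOPS on 6 cores (about 0.93 million IOPS per core) gives 13 cores, while the rounder 12-core estimate corresponds to assuming about 1 million IOPS per core.

```python
# Intel's reported SPDK result, as quoted in this post
REF_IOPS = 5_600_000
REF_CORES = 6
COST_PER_CORE_USD = 300   # per-core cost assumed in this post


def cores_for_iops(target_iops: int, ref_iops: int = REF_IOPS,
                   ref_cores: int = REF_CORES) -> int:
    """Cores needed for target_iops, assuming linear scaling from a reference point.

    Integer ceiling division rounds any partial core up to a whole core.
    """
    return -(-target_iops * ref_cores // ref_iops)


target = 12_000_000  # IOPS figure quoted for a Falcon 5208 system
strict = cores_for_iops(target)                                     # ~0.93M IOPS/core
rounded = cores_for_iops(target, ref_iops=1_000_000, ref_cores=1)   # ~1M IOPS/core
for label, cores in (("strict", strict), ("rounded", rounded)):
    print(f"{label}: {cores} cores, ~{cores * COST_PER_CORE_USD:,} USD of CPU")
```

Either way, the estimated CPU cost for the SPDK approach lands in the 3,600 to 3,900 USD range at this per-core price.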

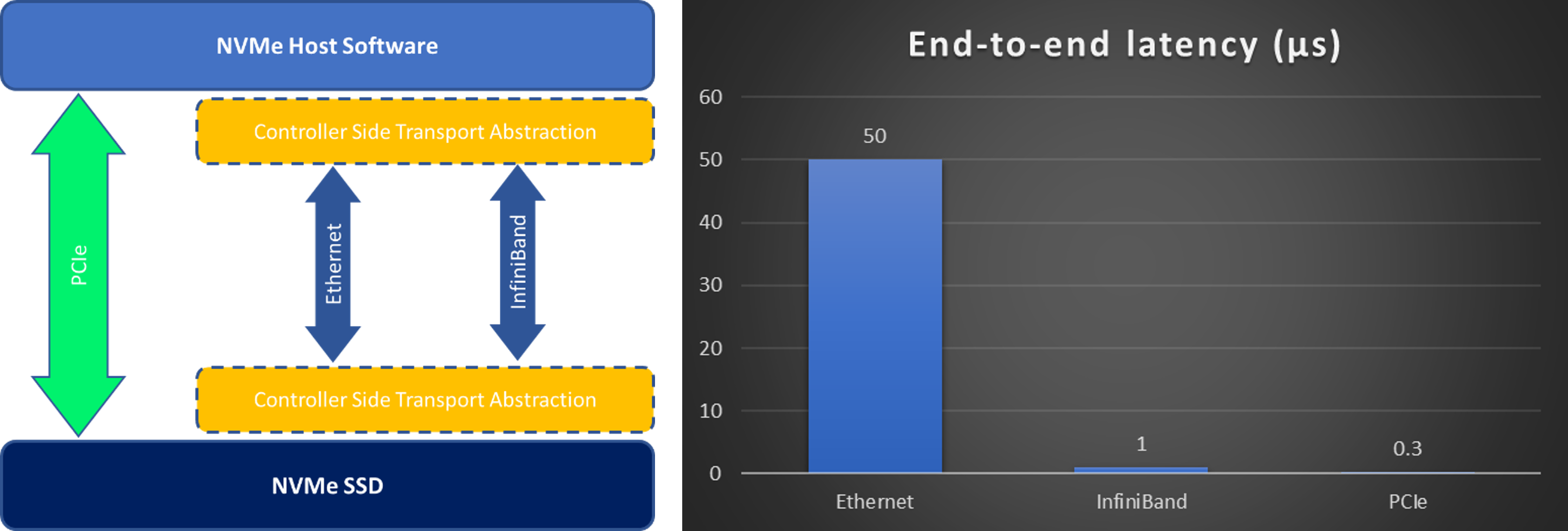

Another issue with common storage solutions is high I/O latency. Most high-performance storage solutions use Fibre Channel or InfiniBand as the interconnect, while the Falcon 5208 NVMe MR-IOV solution uses a PCIe Gen 4 interface. NVMe devices attach natively over PCIe, but NVMe over Fabrics requires a transport layer on each side of the fabric to translate between native PCIe transactions and fabric messages, and these layers add considerable overhead to the system. In contrast, a PCIe fabric forwards native PCIe TLPs (transaction layer packets) without any protocol conversion, so latency is much lower.

By combining PCIe's high bandwidth and low latency with lower communication overhead, a storage system that uses PCIe as its interconnect can move data faster than fabrics that require protocol conversion, so CPUs spend less time waiting on I/O and can process data more efficiently.
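To put the bandwidth side in numbers, a PCIe Gen 4 lane signals at 16 GT/s with 128b/130b encoding. The sketch below converts that into per-direction bandwidth for x4 (a typical NVMe SSD link) and x16 links, and into an upper bound on 4 KiB IOPS per link. It ignores TLP and DLLP packet overhead, so real throughput is somewhat lower; the function names are illustrative.

```python
# PCIe Gen 4 signaling parameters
GEN4_GT_PER_S = 16e9      # 16 GT/s per lane
ENCODING = 128 / 130      # 128b/130b line encoding efficiency


def link_bandwidth_bytes(lanes: int, gt_per_s: float = GEN4_GT_PER_S,
                         encoding: float = ENCODING) -> float:
    """Per-direction bandwidth in bytes/s after line encoding.

    Ignores packet (TLP/DLLP) overhead, so this is an upper bound.
    """
    return gt_per_s * encoding * lanes / 8


def max_iops(bandwidth_bytes: float, io_size: int = 4096) -> float:
    """Upper bound on I/O operations per second for a given transfer size."""
    return bandwidth_bytes / io_size


for lanes in (4, 16):
    bw = link_bandwidth_bytes(lanes)
    print(f"Gen4 x{lanes}: {bw / 1e9:.2f} GB/s per direction, "
          f"at most {max_iops(bw) / 1e6:.2f}M 4 KiB IOPS")
```

A Gen 4 x16 link works out to roughly 31.5 GB/s in each direction before packet overhead.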

Compared with NVMe-oF, an NVMe MR-IOV solution over a PCIe fabric delivers better performance in virtualized environments. It extracts the full capability of NVMe devices while improving CPU utilization in data centers. The NVMe MR-IOV solution not only lowers system cost but also makes IT resources more flexible.

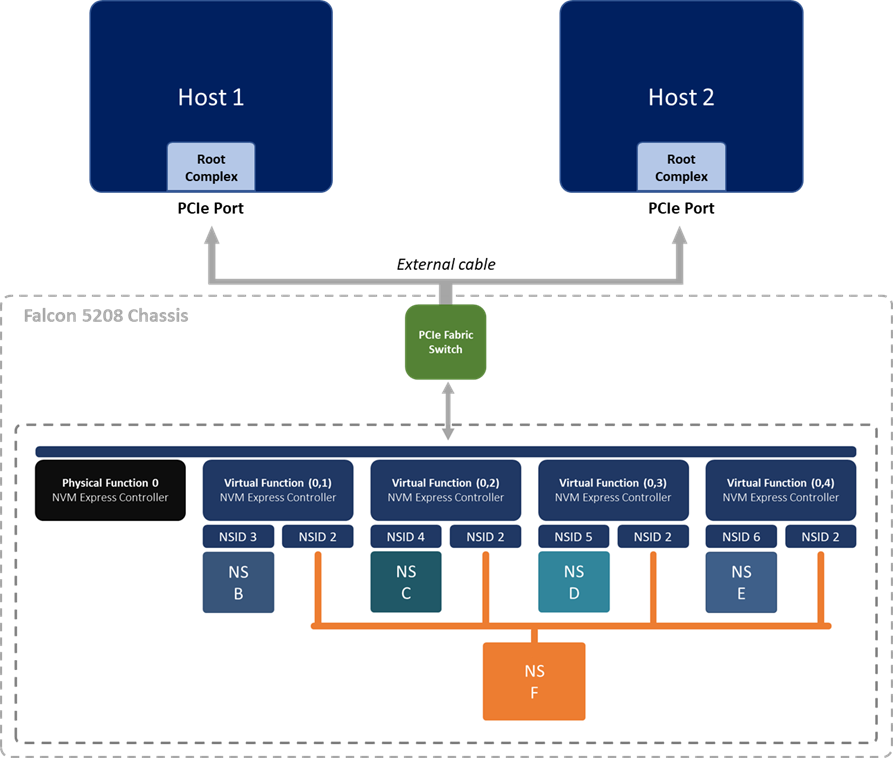

Last but not least, an NVMe MR-IOV solution improves SSD utilization by allowing more precise allocation of storage capacity. Users can allocate capacity in any size from megabytes to terabytes by creating different namespaces. These namespaces can be attached to any SR-IOV virtual function(s) of the same SSD, allowing multiple hosts or VMs to read from and write to the same physical SSD. In addition, a shared namespace lets different hosts access exactly the same logical blocks, which eliminates unnecessary copies of data in the storage system and leads to better capacity utilization.

Shared namespace F in the diagram is attached to all virtual functions. The VFs can be assigned to different hosts, giving each of those hosts access to NS F.
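The namespace-to-VF relationship can be sketched as a small data model. This is a hypothetical illustration, not the Falcon 5208's management interface: the class names, the 7,680 GB SSD, and the namespace sizes are all made up. It gives four VFs (one per host) a private namespace each, attaches a shared namespace F to every VF, and compares the capacity used with what four private copies of F would need.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Namespace:
    """An NVMe namespace: a slice of SSD capacity that can be attached to VFs."""
    name: str
    size_gb: int
    attached_vfs: set[int] = field(default_factory=set)


@dataclass
class Ssd:
    """Hypothetical model of one SR-IOV SSD carved into namespaces."""
    capacity_gb: int
    namespaces: dict[str, Namespace] = field(default_factory=dict)

    def used_gb(self) -> int:
        return sum(ns.size_gb for ns in self.namespaces.values())

    def create_ns(self, name: str, size_gb: int) -> Namespace:
        if self.used_gb() + size_gb > self.capacity_gb:
            raise ValueError(f"not enough free capacity for namespace {name}")
        ns = Namespace(name, size_gb)
        self.namespaces[name] = ns
        return ns

    def attach(self, name: str, vf: int) -> None:
        # Attaching one namespace to several VFs is what makes it shared.
        self.namespaces[name].attached_vfs.add(vf)

    def visible_to(self, vf: int) -> list[str]:
        return sorted(n for n, ns in self.namespaces.items() if vf in ns.attached_vfs)


# Illustrative layout: private namespaces A-D on VFs 0-3, shared F on all four.
ssd = Ssd(capacity_gb=7680)
for vf, name in enumerate("ABCD"):
    ssd.create_ns(name, 500)
    ssd.attach(name, vf)
ssd.create_ns("F", 1000)
for vf in range(4):
    ssd.attach("F", vf)

# Capacity needed if each of the four hosts kept its own copy of F instead
with_copies = ssd.used_gb() + ssd.namespaces["F"].size_gb * 3
print(ssd.visible_to(0), ssd.used_gb(), with_copies)
```

In this made-up layout, sharing F uses 3,000 GB instead of 6,000 GB. Note that when several hosts write to a shared namespace, they still need a cluster-aware file system or similar coordination to keep the data consistent.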

NVMe MR-IOV is an all-round solution for rack-scale deployment. It secures SSD performance and flexibility at a lower overall cost. Applications such as AI development, VDI deployment, cloud computing, and render farms can benefit greatly from the NVMe MR-IOV architecture.