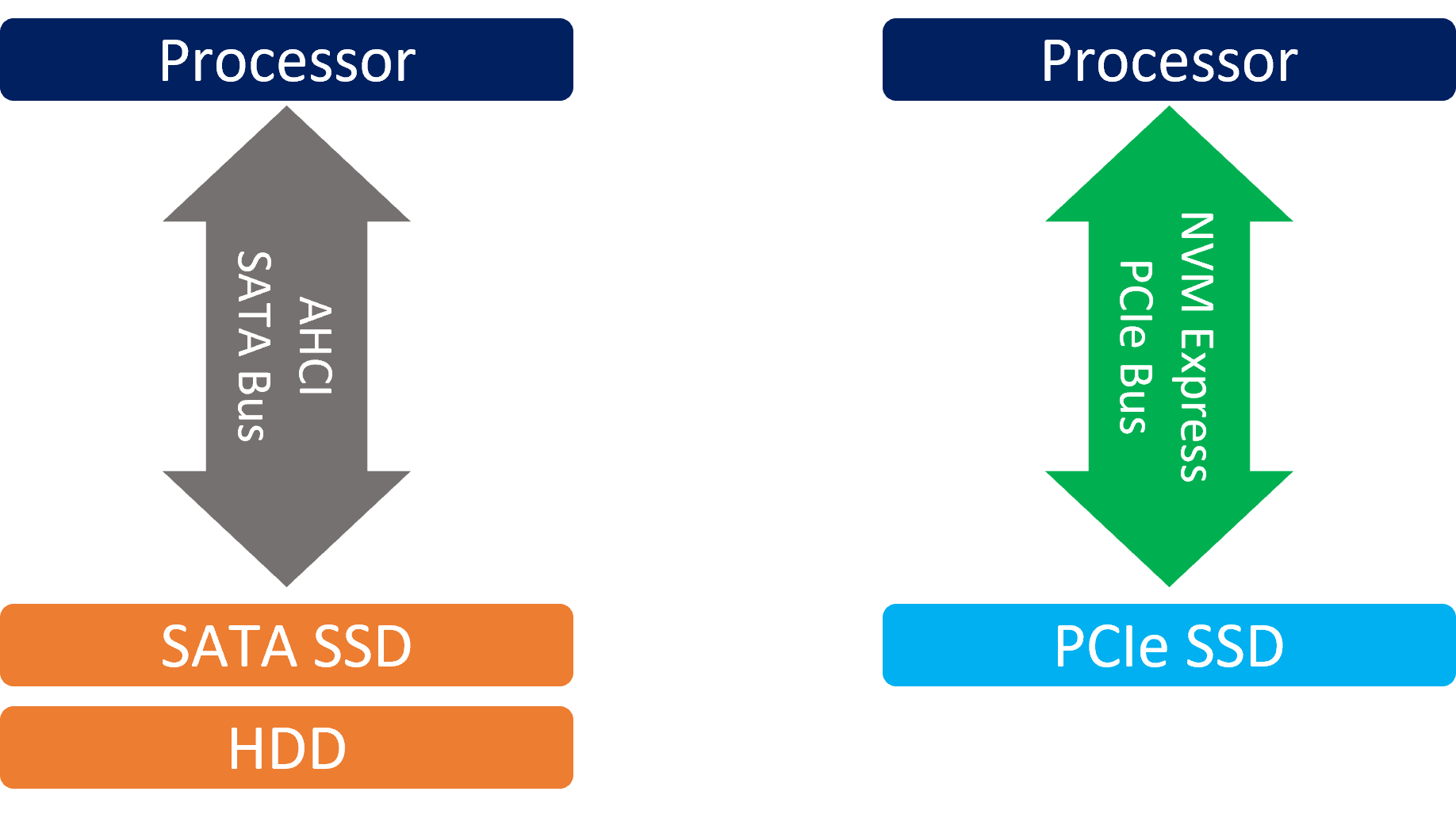

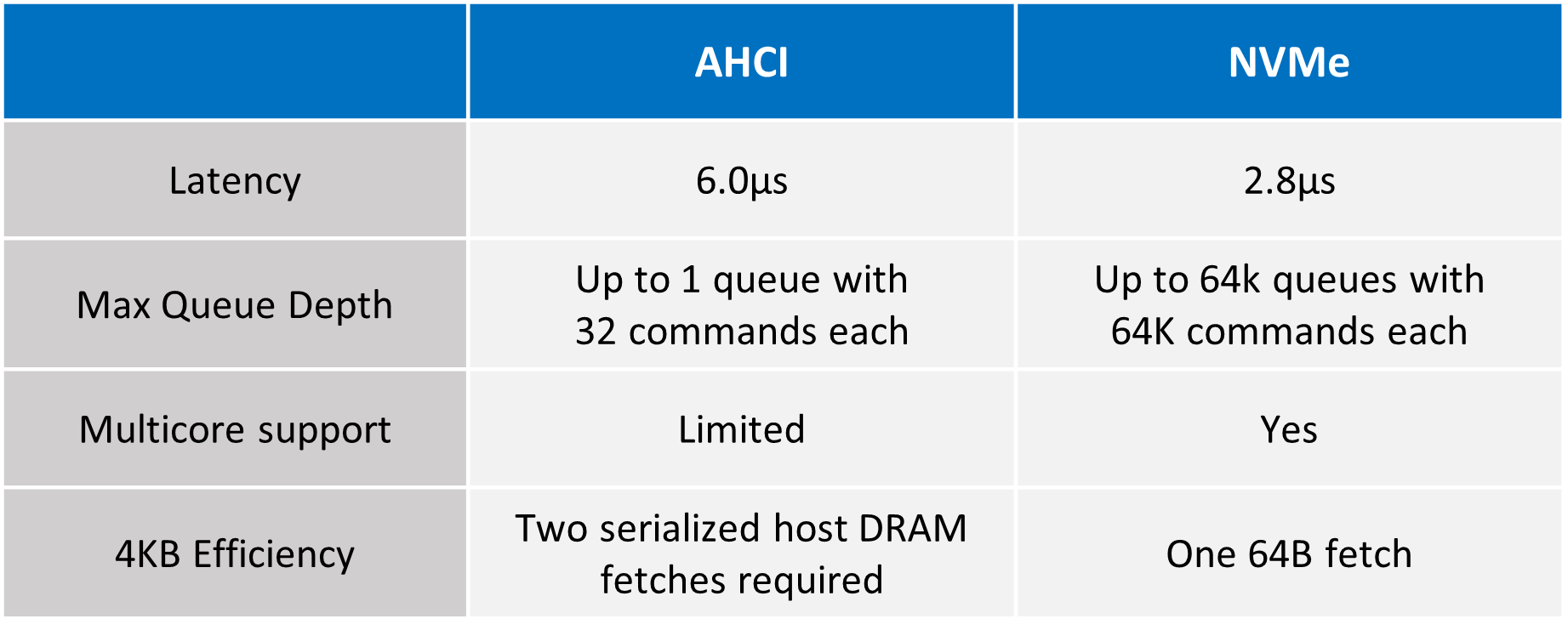

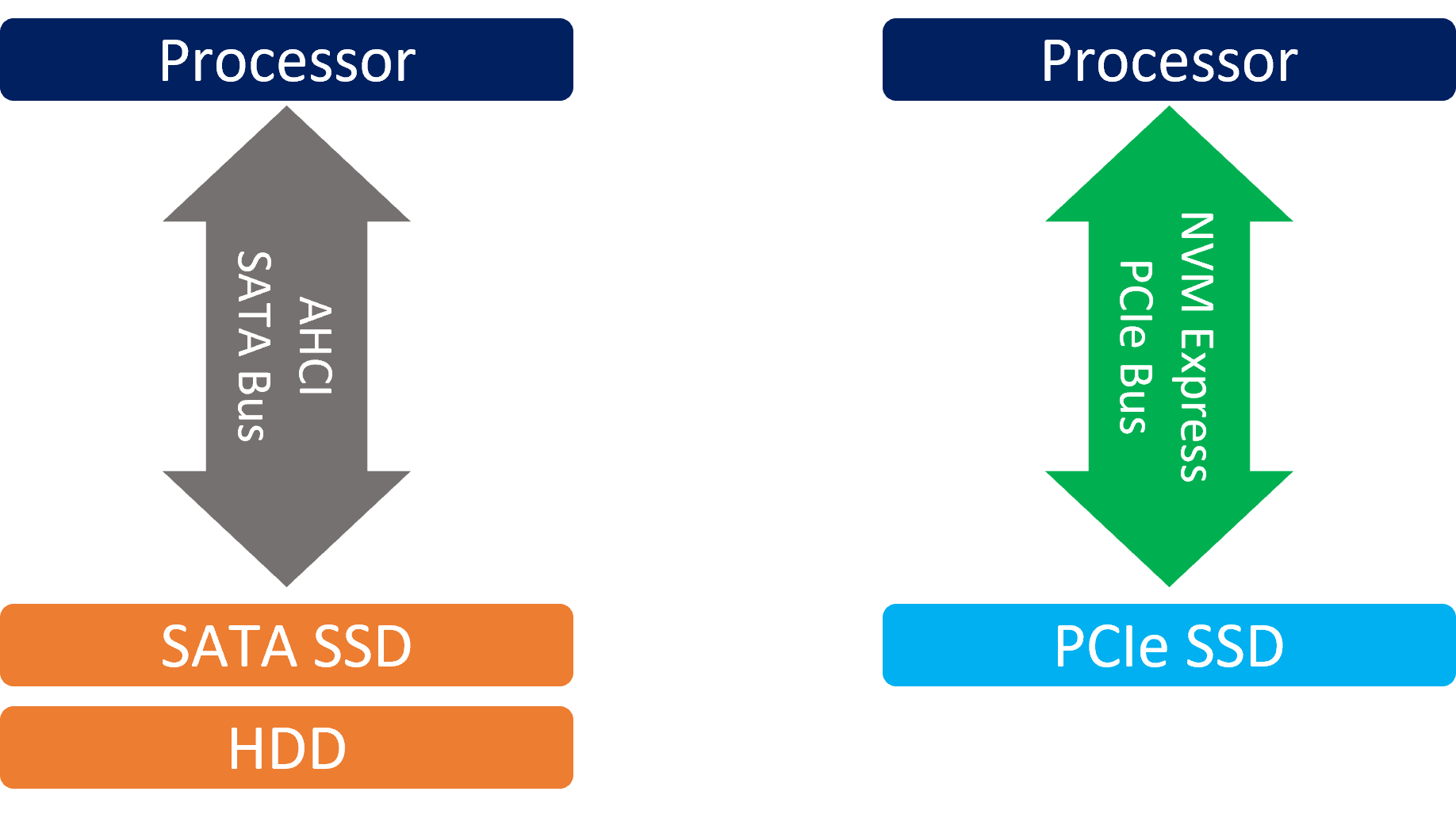

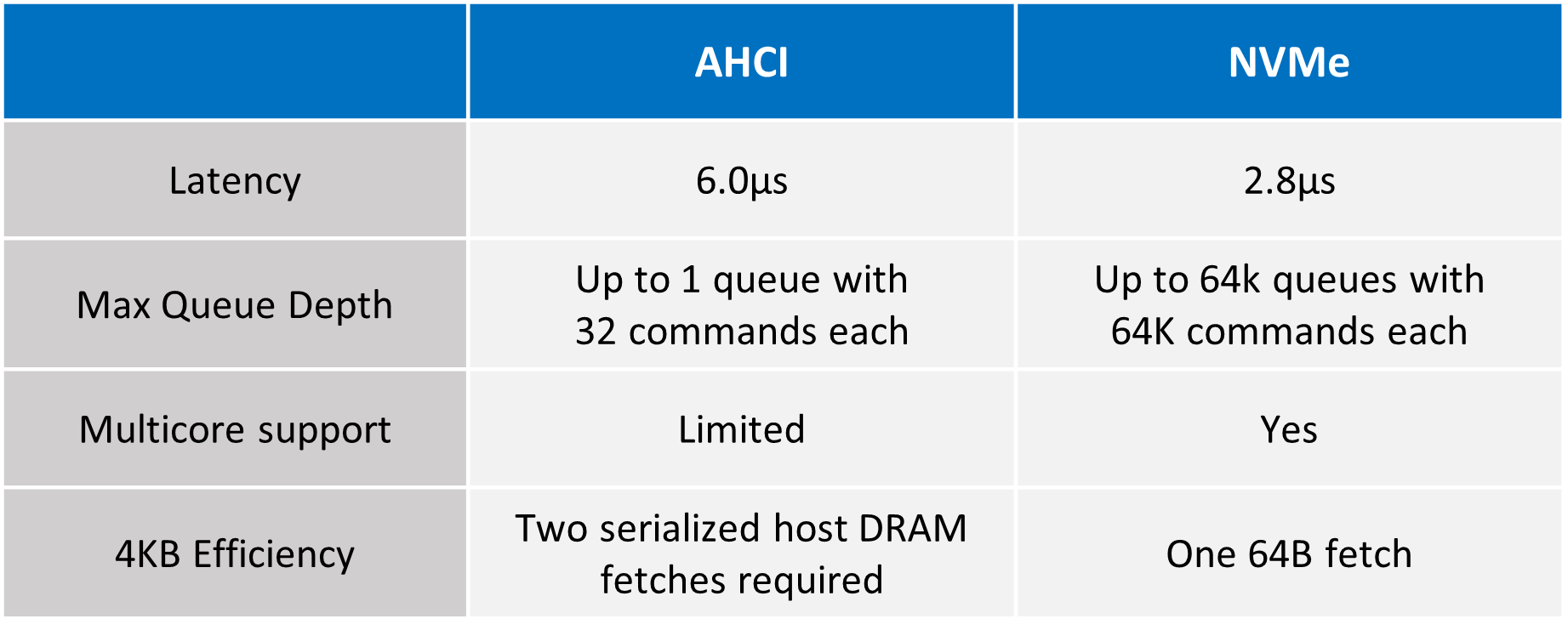

Storage technology has evolved quickly in the past few years, not only to accommodate the rapid growth in data volume but also to speed up access to stored data. Traditional HDDs and the AHCI protocol have struggled to meet high-performance requirements. However, the capacity HDDs offer at much lower cost still makes them a good choice for storing massive amounts of data at the very back end. NAND flash and NVMe (Non-Volatile Memory Express), on the other hand, are the emerging storage medium and protocol serving high-performance needs in datacenters. Unlike AHCI, which transfers data through SATA, the NVMe protocol runs directly over PCI Express. Its streamlined register interface and command set, together with direct data transfer over the PCIe bus, reduce the I/O overhead caused by protocol translation. Moreover, PCIe offers higher bandwidth and lower latency for communication between processors and storage media.

Source: https://www.anandtech.com/show/7843/testing-sata-express-with-asus/4
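A rough back-of-the-envelope calculation shows where the bandwidth gap comes from, assuming a SATA III drive on one side and a typical x4 NVMe SSD on PCIe 3.0 on the other. The sketch only accounts for line encoding (8b/10b for SATA III, 128b/130b for PCIe 3.0) and ignores packet and protocol overhead, so real-world throughput is lower for both; the helper name `usable_gbps` is purely illustrative.

```python
# Usable per-direction bandwidth after line-encoding overhead only.
# Assumptions: SATA III = 6 Gb/s line rate with 8b/10b encoding;
# PCIe 3.0 = 8 GT/s per lane with 128b/130b encoding, x4 link.
# Packet headers, flow control and command overhead are ignored.

def usable_gbps(line_rate_gbps: float, payload_bits: int,
                coded_bits: int, lanes: int = 1) -> float:
    """Hypothetical helper: line rate x encoding efficiency x lane count."""
    return line_rate_gbps * payload_bits / coded_bits * lanes

sata3 = usable_gbps(6.0, 8, 10)            # one SATA III link
pcie3_x4 = usable_gbps(8.0, 128, 130, 4)   # four PCIe 3.0 lanes

# Convert Gb/s to decimal MB/s (1 MB = 10^6 bytes) for readability.
print(f"SATA III   : {sata3:6.2f} Gb/s = {sata3 / 8 * 1000:5.0f} MB/s")
print(f"PCIe 3.0 x4: {pcie3_x4:6.2f} Gb/s = {pcie3_x4 / 8 * 1000:5.0f} MB/s")
print(f"ratio      : {pcie3_x4 / sata3:.1f}x")
```

Under these assumptions the x4 PCIe 3.0 link carries about 6.6 times the usable bandwidth of SATA III (roughly 3.9 GB/s versus 600 MB/s), before any savings from NVMe's lighter command path are counted.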

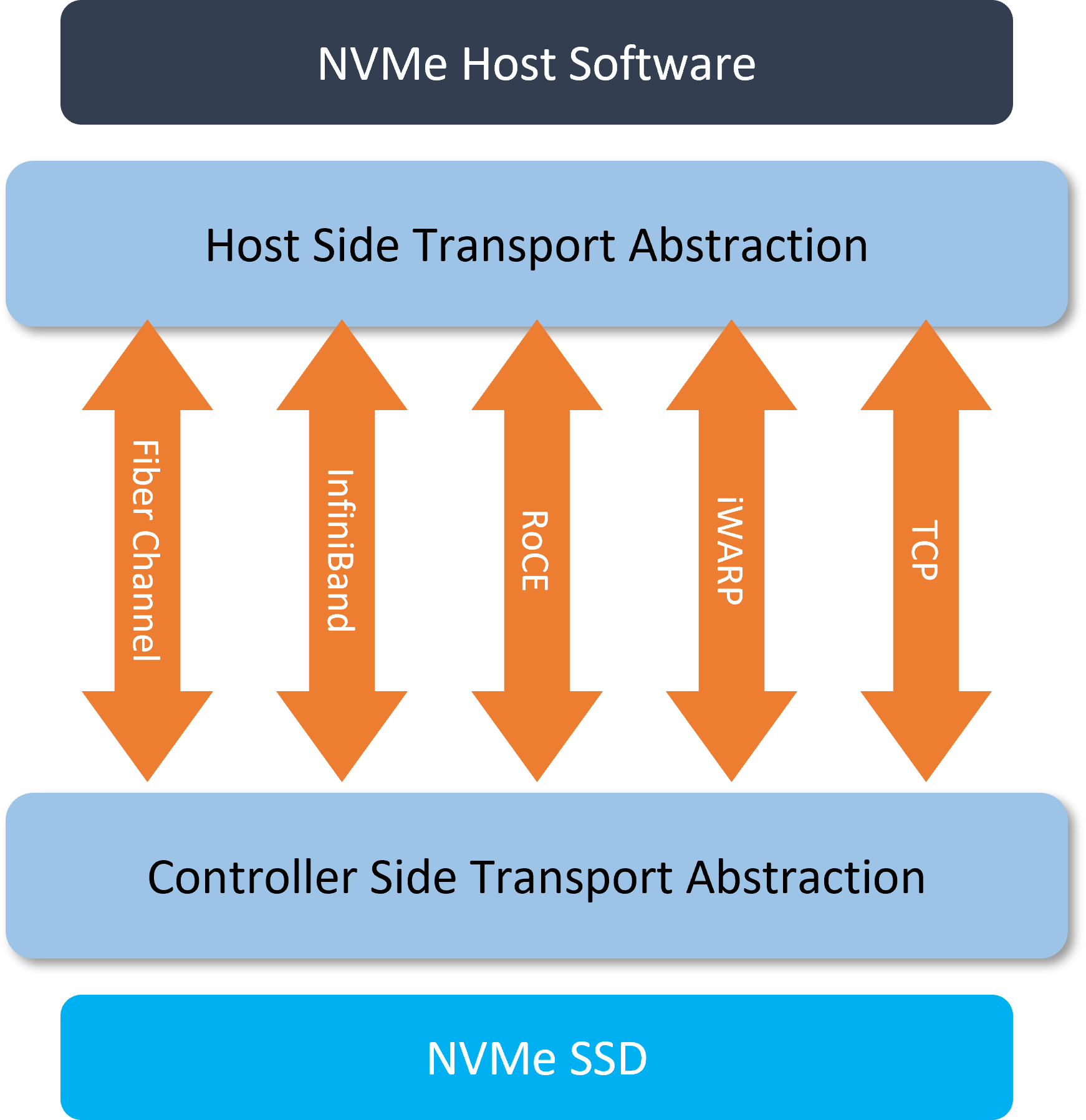

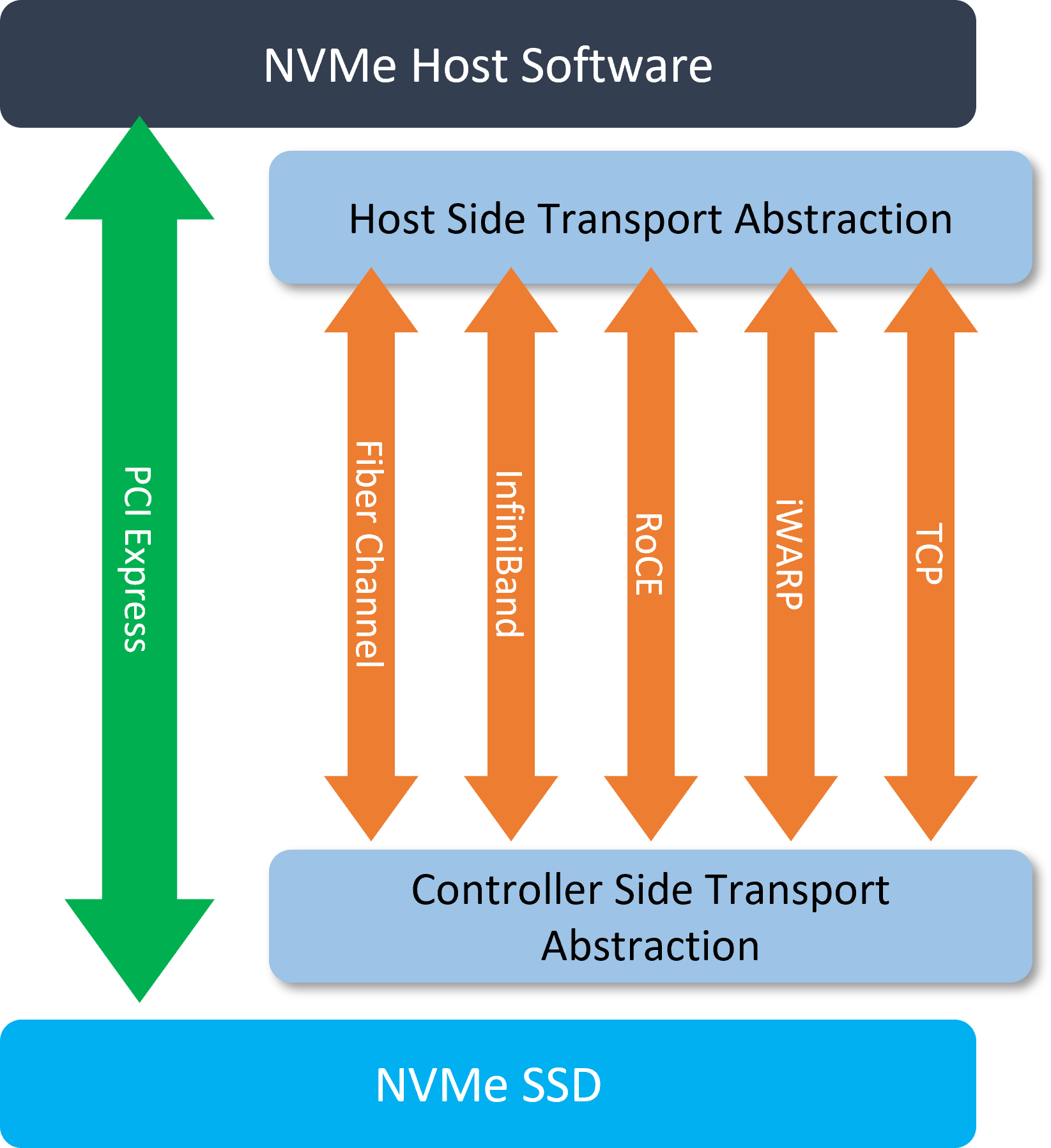

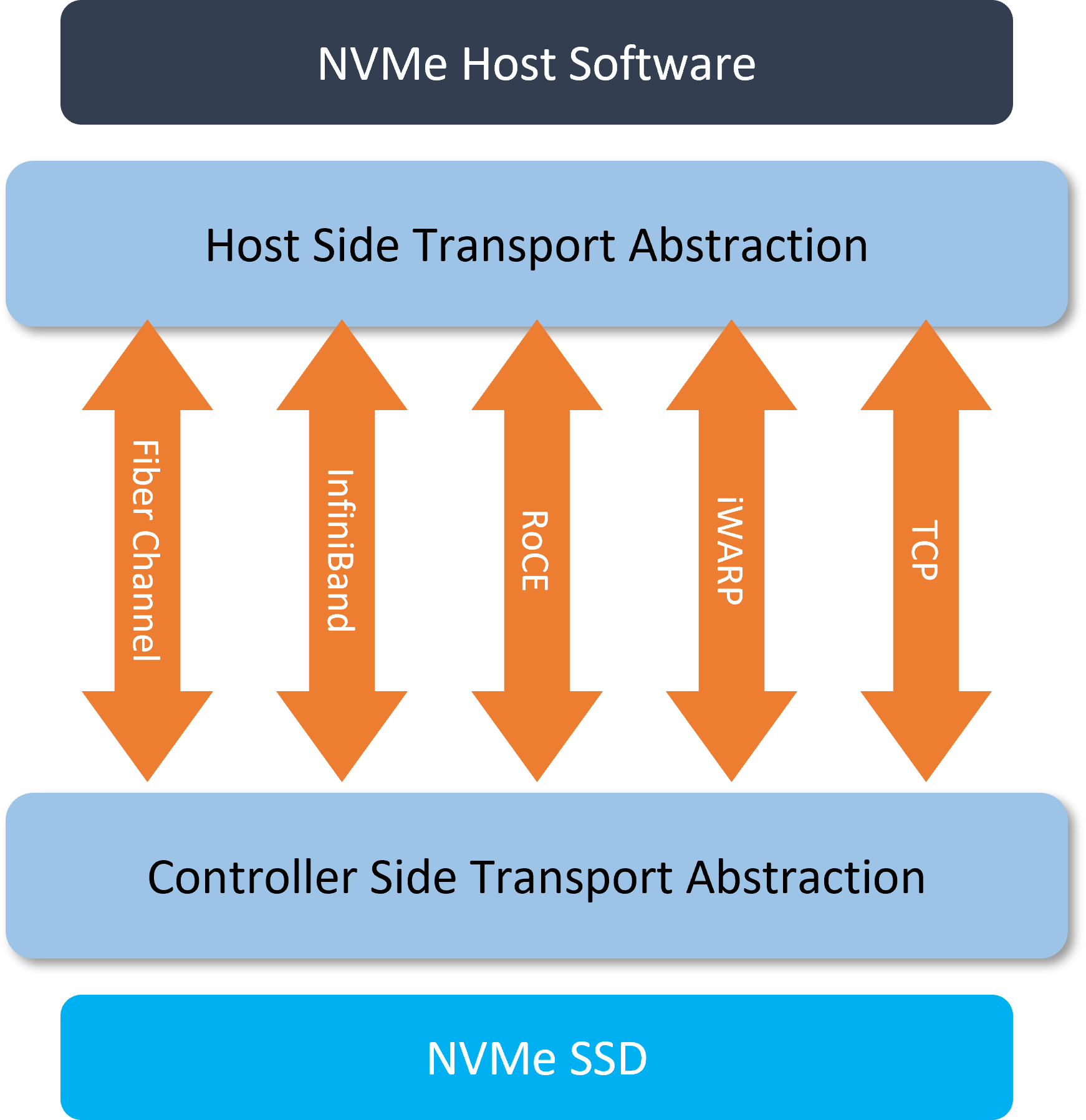

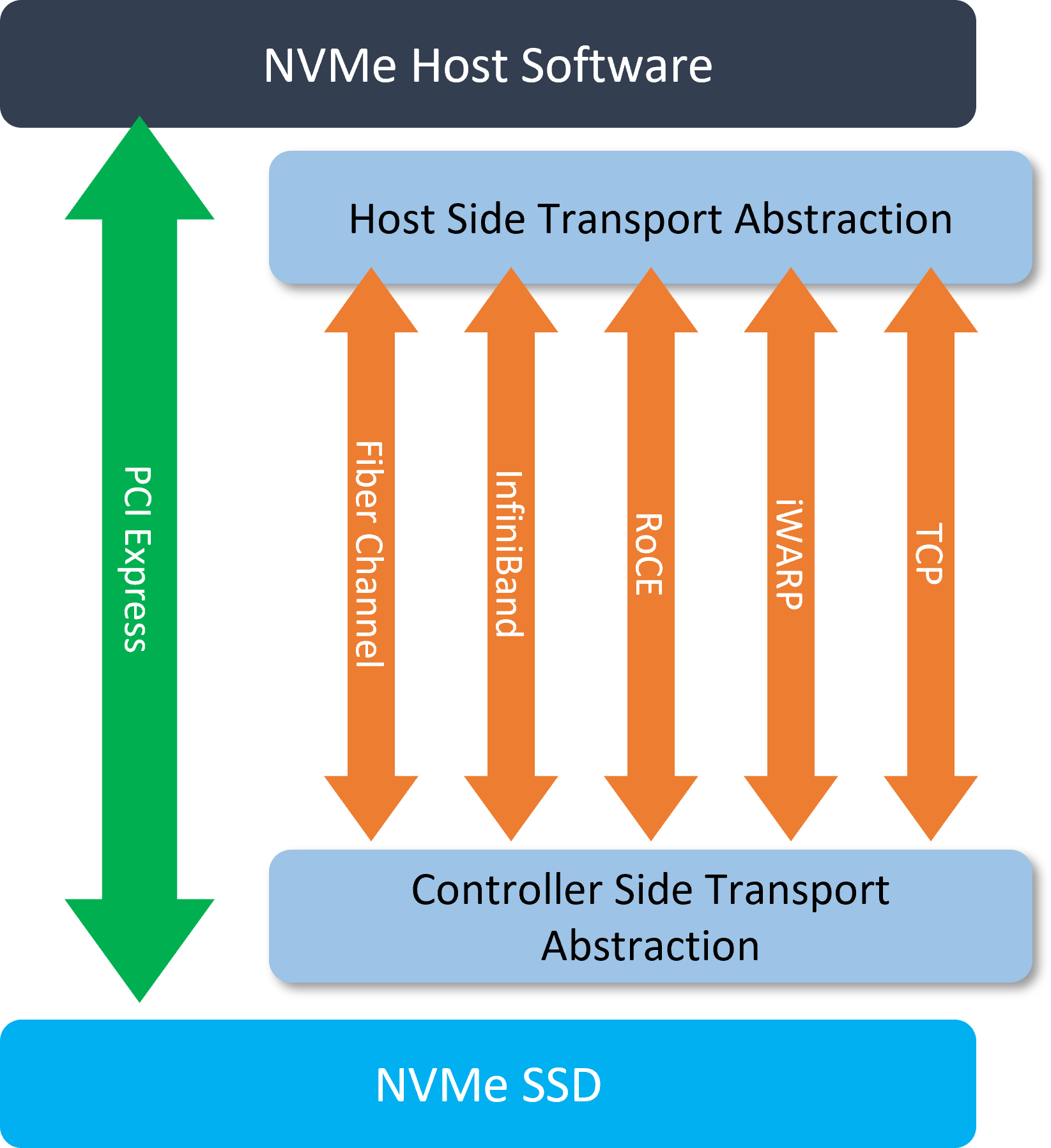

NVMe also makes storage performance more scalable, since bandwidth can be scaled by changing the number of PCIe lanes. Furthermore, the NVM Express Work Group introduced the NVMe over Fabrics (NVMe-oF) specification, a storage networking protocol that scales storage out further by disaggregating storage media and making them accessible over network fabrics. The most common NVMe-oF implementations include NVMe over InfiniBand, NVMe over Fibre Channel, NVMe over RoCE, NVMe over iWARP, and the latest, NVMe over TCP.
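Because every lane adds the same raw transfer rate, link bandwidth grows linearly with link width. The sketch below illustrates this for PCIe 4.0 (16 GT/s per lane, 128b/130b encoding), ignoring packet overhead; `GT_PER_LANE` and `pcie_gbytes_per_s` are hypothetical names chosen for this example.

```python
# Approximate per-direction PCIe bandwidth by generation and link width.
# Assumptions: Gen3/4/5 lane rates of 8/16/32 GT/s, 128b/130b encoding,
# and no accounting for TLP headers or flow-control overhead.
GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}  # GT/s per lane
ENCODING = 128 / 130                      # 128b/130b line code (Gen3 and later)

def pcie_gbytes_per_s(gen: int, lanes: int) -> float:
    """Hypothetical helper: usable GB/s in one direction for a given link."""
    return GT_PER_LANE[gen] * ENCODING * lanes / 8  # bits -> bytes

# Common link widths: doubling the lanes doubles the bandwidth.
for lanes in (1, 2, 4, 8, 16):
    print(f"PCIe 4.0 x{lanes:<2}: {pcie_gbytes_per_s(4, lanes):5.2f} GB/s")
```

Going from a x4 to a x8 or x16 link is therefore the simplest way to raise the ceiling for a single NVMe device or switch port, provided the slot and device support that width.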

New semiconductor process technology will give storage media even higher capacity and performance. That means the fabrics will have to keep pace so that processors can access the storage at full speed; otherwise, the fabric becomes the bottleneck in the system.

Is PCIe fabric a viable option for NVMe-oF applications? PCIe fabric has a natural advantage because NVMe runs directly on the PCIe bus. With a PCIe fabric, data can be transferred between the host CPU and flash storage using the NVMe protocol end to end, avoiding the protocol translation that adds latency. In addition, PCIe is almost universal in datacenters, so it can be easily adapted into current datacenter infrastructure.

The fastest InfiniBand variant (a 12x EDR link) offers 300 Gb/s of bandwidth, with latency measured in nanoseconds. In comparison, the upcoming PCIe 5.0 specification provides about 4 GB/s of bandwidth per lane (32 GT/s), also with latency measured in nanoseconds. That means a x8 PCIe 5.0 link comes close to the fastest InfiniBand link, while a x16 link offers roughly 1.7 times its bandwidth. Fibre Channel offers up to 256 Gb/s with latency under 10 microseconds, and the latest Ethernet switches reach 400 Gb/s with latency from 50 to 125 microseconds. Performance-wise, PCIe and InfiniBand stand out. However, a PCIe fabric does not require the specialized hardware that InfiniBand does, so the cost of adopting a PCIe network is much lower than that of an InfiniBand network.
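The lane arithmetic behind this comparison is easy to check. This sketch takes the InfiniBand, Fibre Channel and Ethernet figures quoted above at face value and derives PCIe 5.0 from its 32 GT/s lane rate with 128b/130b encoding; all numbers are per direction and ignore protocol overhead, and the `links` table is just an illustrative structure.

```python
# Per-direction link bandwidth in Gb/s.
# PCIe 5.0 is derived from 32 GT/s per lane with 128b/130b encoding
# (~31.5 Gb/s, i.e. ~3.94 GB/s, per lane); the other figures are the
# ones quoted in the text. Protocol/packet overhead is ignored.
PCIE5_LANE_GBPS = 32.0 * 128 / 130

links = {
    "PCIe 5.0 x8": 8 * PCIE5_LANE_GBPS,
    "PCIe 5.0 x16": 16 * PCIE5_LANE_GBPS,
    "InfiniBand 12x EDR": 300.0,
    "Fibre Channel": 256.0,
    "Ethernet": 400.0,
}

ib = links["InfiniBand 12x EDR"]
# List links from fastest to slowest, relative to 12x EDR InfiniBand.
for name, gbps in sorted(links.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<20} {gbps:6.1f} Gb/s  ({gbps / ib:4.2f}x 12x EDR IB)")
```

Under these assumptions, a x8 PCIe 5.0 link (about 252 Gb/s) reaches roughly 84% of 12x EDR InfiniBand, and a x16 link (about 504 Gb/s) roughly 1.7 times it.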

One downside of a PCIe network is distance. In PCIe 4.0, the maximum length a signal can travel over copper cables is around 2 meters, which is significantly shorter than FC or Ethernet, both of which can extend beyond 100 meters. Transporting signals over distance would be even more difficult in PCIe 5.0. Therefore, given current PCIe fabric technology, the ideal use case for PCIe fabric is a smaller cluster with high-performance requirements.

Perhaps future interconnect technology will enable longer-distance PCIe signal transmission. When that happens, PCIe fabric could become the most cost-efficient option for high-performance NVMe-oF systems.