Cost-Efficient Composable Infrastructure: Software, Physical, or Mixed Composability?

Explore the benefits of an integrated hardware and software approach to composability and how it addresses the emerging demand for composable infrastructure.

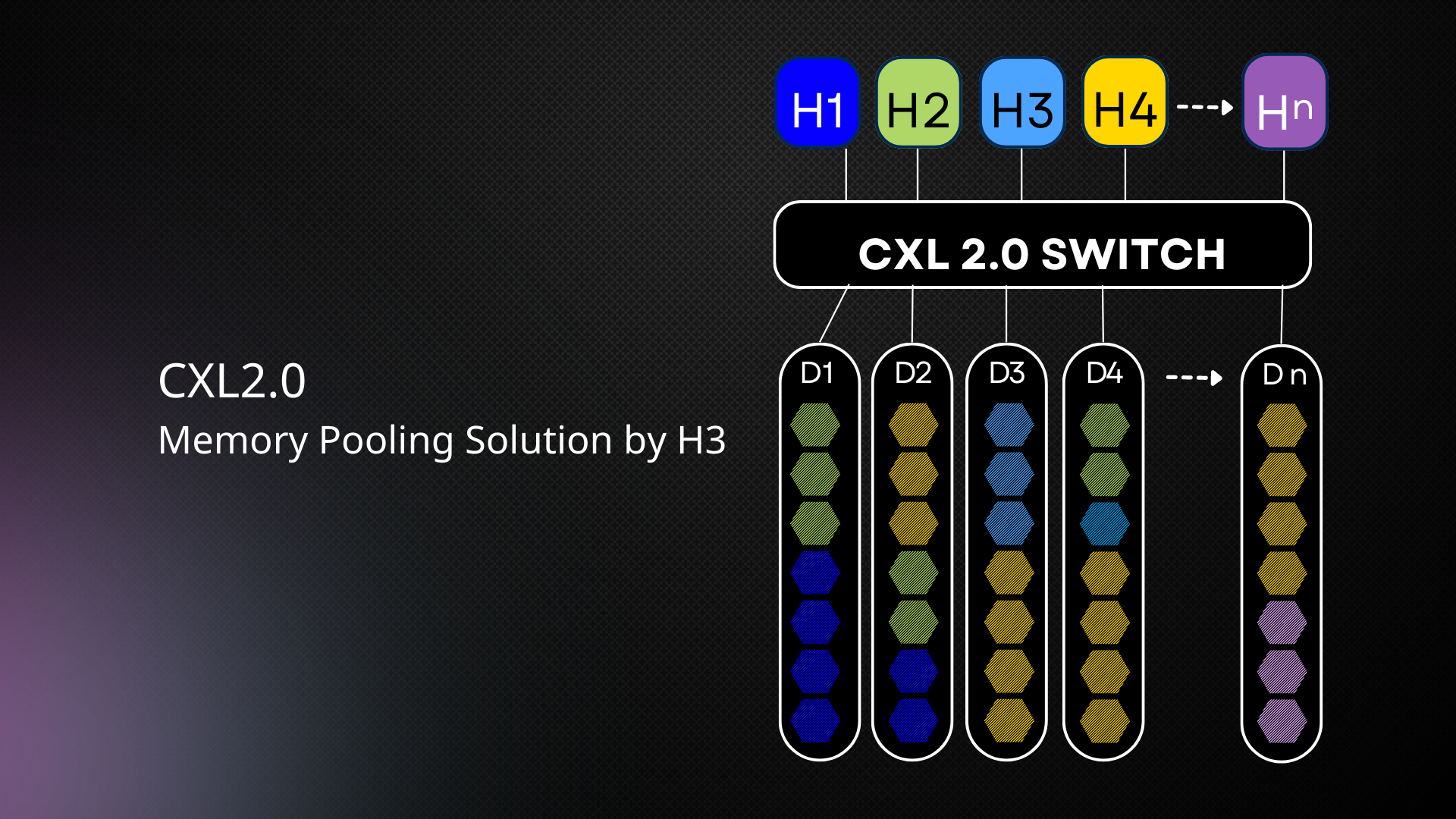

Compute Express Link (CXL) is a high-speed, cache-coherent interconnect technology for data center acceleration and memory pooling. It provides a fast, low-latency connection between CPUs, GPUs, other accelerators, and memory devices. With CXL, multiple devices can access a shared pool of memory that is allocated dynamically according to workload requirements. This memory architecture improves data sharing and coherency across different compute resources.

H3 offers CXL-based memory pooling solutions for efficient memory management in data center environments. These solutions use the high-speed CXL interconnect to create a unified memory pool accessible to multiple compute resources. This approach improves resource utilization, reduces latency, and increases scalability. AI/ML workloads, which often require large amounts of memory, stand to benefit in particular.

Effective memory pooling requires a high-speed interconnect such as CXL for fast data transfer. The pooling system should also provide efficient management mechanisms that minimize overhead and optimize memory usage. Coherency and synchronization mechanisms, such as those provided by the CXL.cache and CXL.mem protocols, are crucial for consistent, synchronized data access. Finally, the solution should scale to accommodate growing memory requirements and an increasing number of compute devices.

Memory pooling offers several features that improve system performance. It optimizes memory utilization by reducing fragmentation and grouping memory blocks of similar sizes into pools, which minimizes wasted capacity. The shared memory architecture lets multiple devices access a common memory pool, promoting resource sharing and coherency among compute resources and enabling efficient data sharing and synchronization. Memory from the pool can also be allocated dynamically according to workload requirements, which gives flexibility in resource utilization and sustains performance as conditions change. The high-speed CXL interconnect enables fast data transfer between devices and the memory pool, further reducing latency. Finally, because multiple devices share the same memory pool, memory pooling simplifies memory management, reduces overhead, and streamlines application development and maintenance.
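To make dynamic allocation from a shared pool more concrete, the following Python sketch models a pool carved into equal-sized extents that hosts request and release as their workloads change. It is a toy model under stated assumptions, not H3's implementation or any CXL API: the `MemoryPool` class, the 256 MiB extent size (`EXTENT_MIB`), and the host names are all hypothetical. Because every extent has the same size, any free extent can satisfy any request, which is one simple way that grouping equal-sized blocks limits fragmentation.

```python
# Toy model of dynamic allocation from a shared memory pool.
# All names and sizes are hypothetical illustrations, not a CXL or H3 API.

EXTENT_MIB = 256  # assumed allocation granularity, not a CXL-defined value


class MemoryPool:
    """Shared memory pool carved into equal-sized extents."""

    def __init__(self, total_extents: int, extent_mib: int = EXTENT_MIB):
        self.extent_mib = extent_mib
        self.free_extents = list(range(total_extents))  # IDs of unassigned extents
        self.assigned: dict[str, list[int]] = {}  # host name -> extent IDs it holds

    def allocate(self, host: str, request_mib: int) -> list[int]:
        # Round the request up to whole extents. Because every extent has the
        # same size, any free extent can serve any request.
        needed = -(-request_mib // self.extent_mib)
        if needed > len(self.free_extents):
            raise MemoryError(f"{host}: pool cannot supply {needed} extents")
        grant = [self.free_extents.pop() for _ in range(needed)]
        self.assigned.setdefault(host, []).extend(grant)
        return grant

    def release(self, host: str) -> int:
        # Return all of a host's extents to the pool so other hosts can use them.
        extents = self.assigned.pop(host, [])
        self.free_extents.extend(extents)
        return len(extents)

    def usage_mib(self, host: str) -> int:
        return len(self.assigned.get(host, [])) * self.extent_mib

    def free_mib(self) -> int:
        return len(self.free_extents) * self.extent_mib


if __name__ == "__main__":
    pool = MemoryPool(total_extents=16)  # 16 x 256 MiB = 4 GiB pool
    pool.allocate("host-a", 1000)        # rounded up to 4 extents (1024 MiB)
    pool.allocate("host-b", 2048)        # exactly 8 extents
    print(pool.free_mib())               # 1024
    pool.release("host-a")               # host-a's workload finishes
    print(pool.free_mib())               # 2048
```

In a real deployment this bookkeeping would likely live in a pool or fabric management layer that also configures the hardware; the sketch only tracks which host holds which extent.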

Despite its benefits, memory pooling presents challenges, notably data coherency and synchronization. When multiple devices access a shared memory pool, robust synchronization mechanisms are required. Efficient allocation algorithms are needed to optimize memory usage and minimize fragmentation within pools. Managing pools across multiple devices adds complexity and requires well-defined software frameworks and protocols. Finally, the solution must be scalable and adaptable to changing workload requirements to maintain performance.
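The synchronization challenge can be illustrated at the level of allocation bookkeeping. The Python sketch below assumes a hypothetical `SharedPoolLedger` that several hosts, modeled as threads, use to reserve capacity; a lock makes the check-and-reserve step atomic so the pool is never over-committed. It does not model hardware data coherency, which CXL addresses in its protocols; the class, the `simulate` helper, and the GiB figures are illustrative assumptions.

```python
import threading


class SharedPoolLedger:
    """Hypothetical capacity ledger for a pool shared by several hosts.

    The lock stands in for whatever serialization a real pool manager provides.
    Without it, two hosts could both see the same free capacity and over-commit.
    """

    def __init__(self, capacity_gib: int):
        self.capacity_gib = capacity_gib
        self.used_gib = 0
        self._lock = threading.Lock()

    def try_reserve(self, gib: int) -> bool:
        # The capacity check and the update happen under one lock, so they are atomic.
        with self._lock:
            if self.used_gib + gib > self.capacity_gib:
                return False
            self.used_gib += gib
            return True

    def release(self, gib: int) -> None:
        with self._lock:
            self.used_gib -= gib


def simulate(ledger: SharedPoolLedger, hosts: int, requests_per_host: int, gib: int) -> int:
    """Let several 'hosts' (threads) race to reserve capacity; return total successes."""
    granted: list[int] = []
    granted_lock = threading.Lock()

    def host_worker() -> None:
        count = 0
        for _ in range(requests_per_host):
            if ledger.try_reserve(gib):
                count += 1
        with granted_lock:
            granted.append(count)

    threads = [threading.Thread(target=host_worker) for _ in range(hosts)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(granted)


if __name__ == "__main__":
    ledger = SharedPoolLedger(capacity_gib=100)
    # 8 hosts x 50 requests of 1 GiB each compete for a 100 GiB pool.
    print(simulate(ledger, hosts=8, requests_per_host=50, gib=1), ledger.used_gib)  # 100 100
```

Whatever the interleaving of threads, exactly 100 reservations succeed, because no host can act on a stale view of free capacity.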

H3 holds a unique position in the market with three generations of composable solutions, which customer feedback shows to be tested and reliable. With a solid Tier 1 customer base, H3 has the experience and knowledge to offer qualified services to large enterprises, and strong partnerships with leading CPU and system vendors back its technical support. H3 plans to launch composable memory solutions by the end of 2023, placing itself at the frontier of memory pooling and sharing. H3 is ready to embrace the era of memory pooling and drive innovation forward.