CXL 2.0 Memory Pooling Solution by H3

Announcing the upcoming CXL 2.0 composable solution.

In this blog, I'll explore why people expect CXL (Compute Express Link) to become the mainstream choice for memory pooling systems. With ever-growing cloud infrastructure, widespread 5G network deployment, and explosive demand for artificial intelligence applications, the demand for memory has increased, and the cost of memory has risen sharply with it. Finding a way to reduce the cost of memory has become a top priority.

Research shows that memory costs continue to increase as a percentage of total server cost. In 2012, memory accounted for 15% of server cost; by 2021, this proportion had soared to 37%. Such rising costs challenge hyperscalers like Amazon, Azure, Google, and Meta, so they actively promote measures to reduce memory cost, which has pushed them toward CXL. With the solid financial backing and commitment of hyperscale cloud players such as AWS, Azure, and Google, the CXL Consortium was formed amid this trend. The Consortium's membership now includes major CPU suppliers, CXL memory module manufacturers, and system suppliers. Next, I will introduce Intel's vision for computing.

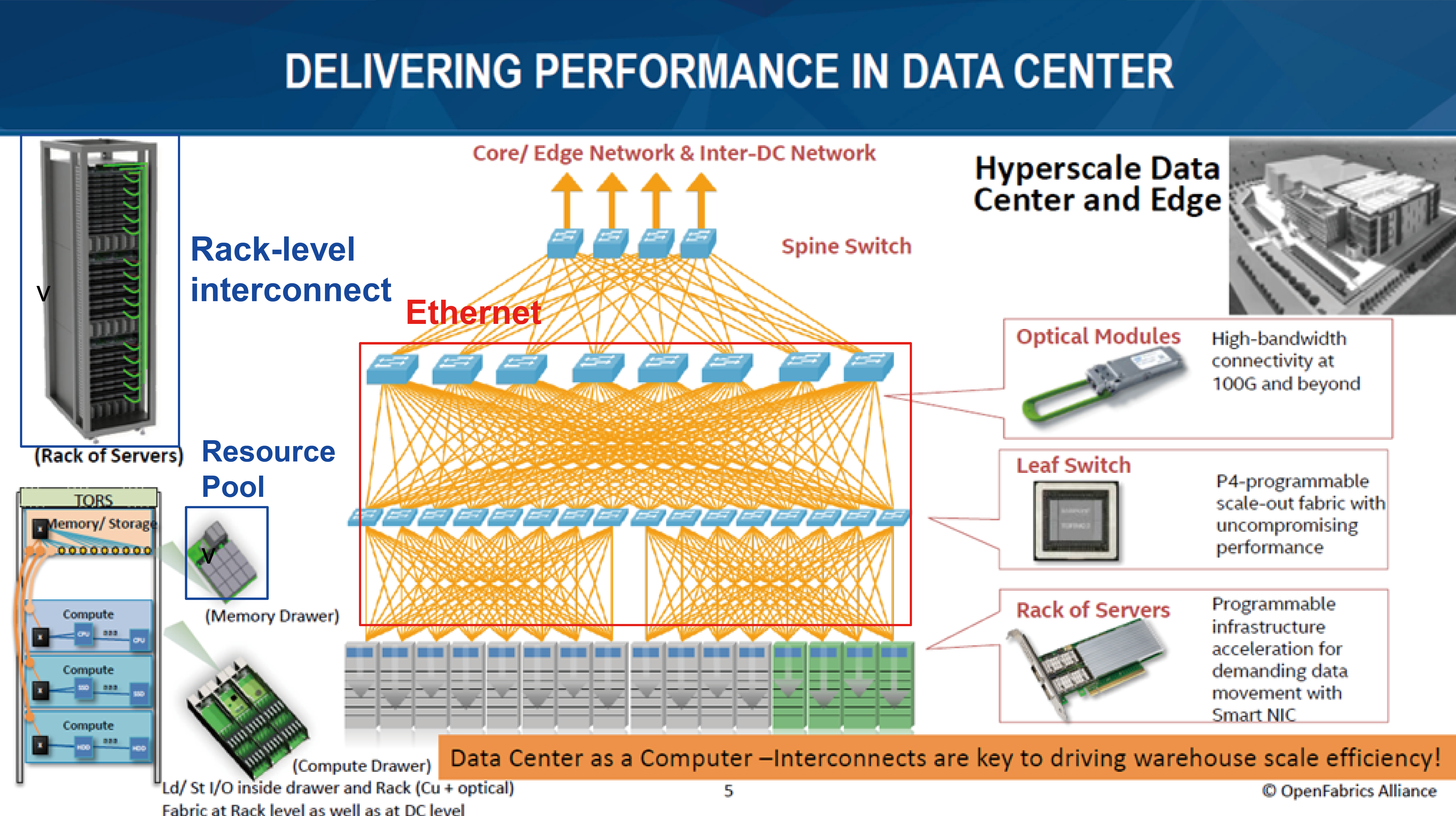

The picture shows Intel's vision for computing. The red box is Ethernet, which is responsible for communication across racks. The blue box is responsible for communication within the rack, which is the most intriguing part of CXL: memory is separated from the compute nodes, and all of the chassis connect via CXL. Intel believes that all resources can be composable. CPUs are in the compute nodes, memory is in the memory pool, storage is in separate chassis, and GPUs/FPGAs are in the accelerator box. This is infrastructure that can be disaggregated.

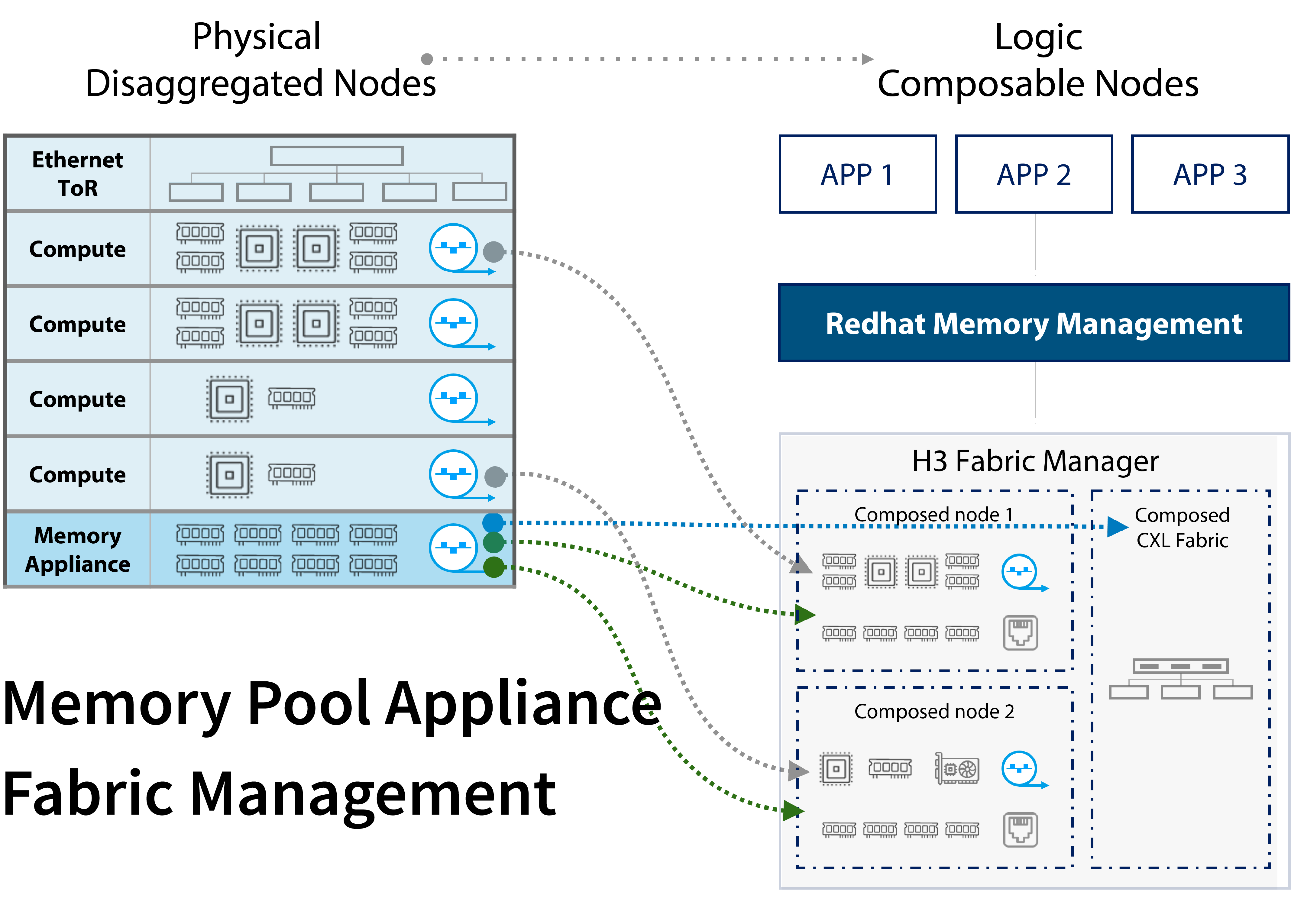

The rack level matches the picture on the left. Compute and memory nodes are housed in separate chassis and connected by PCIe Gen5 external cables. When memory is allocated to a connected host, the server sees this CXL memory as its own. The figure on the right visualizes the Logic Composable Nodes, where memory stands by for different application uses. CXL memory can be allocated to connected servers when needed and unmapped when no longer in use.
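
The allocate-when-needed, unmap-when-done lifecycle described above can be sketched in a few lines. This is only a toy model under stated assumptions: the `MemoryPool` class, the host names, and the 1024 GiB capacity are hypothetical and do not represent H3's management software or any CXL API.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryPool:
    """Toy model of a rack-level CXL memory pool (hypothetical, not a real API)."""
    capacity_gib: int
    # host name -> GiB currently mapped to that host
    allocations: dict[str, int] = field(default_factory=dict)

    @property
    def free_gib(self) -> int:
        """Capacity that is not mapped to any host right now."""
        return self.capacity_gib - sum(self.allocations.values())

    def allocate(self, host: str, size_gib: int) -> None:
        """Map size_gib of pooled memory to a host, if enough is free."""
        if size_gib <= 0:
            raise ValueError("size must be positive")
        if size_gib > self.free_gib:
            raise MemoryError(f"only {self.free_gib} GiB free in the pool")
        self.allocations[host] = self.allocations.get(host, 0) + size_gib

    def release(self, host: str) -> int:
        """Unmap everything held by a host and return the freed GiB."""
        return self.allocations.pop(host, 0)


pool = MemoryPool(capacity_gib=1024)   # assumed pool size
pool.allocate("server-a", 256)
pool.allocate("server-b", 512)
print(pool.free_gib)                   # 256
pool.release("server-a")               # server-a no longer needs the memory
print(pool.free_gib)                   # 512
```

The point of the sketch is that capacity returned by one server immediately becomes available to another, which is what distinguishes a pool from memory fixed inside each server.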

CXL Pooling and Sharing Topology

The figure on the right shows the system architecture for memory pooling. The one on the left shows the system architecture for memory sharing.

CXL Expansion Topology

This picture shows the memory expansion system. Memory expansion can involve one or more memory systems.

The video is a CXL GUI demo that shows how to set up memory pooling. In the system, four ports are configured for hosts and twelve ports for CXL memory.
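
As a rough illustration of that port layout, the configuration can be written down as a simple role table. Only the 4/12 split comes from the demo; the port numbering, the `PortRole` enum, and the `count_ports` helper are assumptions made for this sketch.

```python
from enum import Enum


class PortRole(Enum):
    HOST = "host"
    MEMORY = "cxl-memory"


# Assumed numbering: ports 0-3 face hosts, ports 4-15 face CXL memory.
# The demo states only the counts, not which physical ports are used.
port_roles = {port: PortRole.HOST for port in range(4)}
port_roles.update({port: PortRole.MEMORY for port in range(4, 16)})


def count_ports(roles: dict[int, PortRole], role: PortRole) -> int:
    """Count how many ports are configured with the given role."""
    return sum(1 for r in roles.values() if r is role)


print(count_ports(port_roles, PortRole.HOST))    # 4
print(count_ports(port_roles, PortRole.MEMORY))  # 12
```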

To divide a CXL memory module into smaller memory blocks, click on the module and create them. When configuring a memory block, you can select its size and its attributes, such as 'shared' or 'private'. If a memory block is assigned as 'shared', multiple hosts can perform read and write operations on it. Note, however, that we do not manage cache coherency.
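
The partitioning rules above can be modeled in a small sketch: a module is carved into blocks, and each block is either private (one host) or shared (several hosts). The class names, block naming scheme, and the 128 GiB module size are hypothetical; the GUI's actual data model is not described in this post.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryBlock:
    name: str
    size_gib: int
    shared: bool                      # True = 'shared', False = 'private'
    hosts: set[str] = field(default_factory=set)

    def map_to(self, host: str) -> None:
        """Map this block to a host, enforcing the private/shared rule."""
        if not self.shared and self.hosts and host not in self.hosts:
            owner = sorted(self.hosts)[0]
            raise PermissionError(f"{self.name} is private to {owner}")
        self.hosts.add(host)


@dataclass
class MemoryModule:
    name: str
    capacity_gib: int
    blocks: list[MemoryBlock] = field(default_factory=list)

    def create_block(self, size_gib: int, shared: bool) -> MemoryBlock:
        """Carve a block out of the module's remaining capacity."""
        used = sum(b.size_gib for b in self.blocks)
        if size_gib <= 0 or used + size_gib > self.capacity_gib:
            raise ValueError("block does not fit in the module")
        block = MemoryBlock(f"{self.name}-blk{len(self.blocks)}", size_gib, shared)
        self.blocks.append(block)
        return block


module = MemoryModule("cxl-mem0", capacity_gib=128)   # assumed module size
private_blk = module.create_block(64, shared=False)
shared_blk = module.create_block(32, shared=True)

shared_blk.map_to("host1")
shared_blk.map_to("host2")        # allowed: shared blocks accept several hosts
private_blk.map_to("host1")
try:
    private_blk.map_to("host2")   # rejected: a private block has one owner
except PermissionError as err:
    print(err)
```

Note that the sketch only tracks who may access a block. Because cache coherency is not managed for shared blocks, coordinating concurrent writers would be left to software on the hosts.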

Next, check the hosts' view. When you click on a host port, the memory blocks on the right are the ones that belong to that host, and the memory blocks in the middle are available for the host to use. If memory blocks are shareable, they can be mapped to multiple hosts. On the top bar, the Host Memory Address shows the host's memory address range and which memory blocks are allocated to which memory section. This GUI is installed on the management CPU.
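
To make the Host Memory Address bar more concrete, here is a minimal sketch of how blocks might be laid out in one host's address space and looked up by address. The contiguous back-to-back layout, the base address, and the names `Section`, `build_host_map`, and `block_at` are all assumptions for illustration; the post does not say how the switch actually assigns addresses.

```python
from typing import NamedTuple, Optional

GIB = 1 << 30


class Section(NamedTuple):
    start: int      # first byte of the section in the host's address space
    end: int        # one past the last byte
    block: str      # name of the memory block mapped there


def build_host_map(base: int, blocks: list[tuple[str, int]]) -> list[Section]:
    """Place blocks back to back starting at `base`; sizes are in GiB."""
    sections = []
    cursor = base
    for name, size_gib in blocks:
        size = size_gib * GIB
        sections.append(Section(cursor, cursor + size, name))
        cursor += size
    return sections


def block_at(sections: list[Section], address: int) -> Optional[str]:
    """Return the block that backs a host address, or None if unmapped."""
    for section in sections:
        if section.start <= address < section.end:
            return section.block
    return None


# Hypothetical host window starting at 64 GiB, holding a 64 GiB and a 32 GiB block.
host1_map = build_host_map(0x10_0000_0000, [("blk0", 64), ("blk1", 32)])
for s in host1_map:
    print(f"{s.start:#x} - {s.end - 1:#x}  {s.block}")

print(block_at(host1_map, 0x10_0000_0000 + 70 * GIB))   # blk1
```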

Will CXL become the mainstream technology in the future? This blog has approached that question by analyzing the memory pool architecture and presenting a GUI demonstration, both of which show the efficient resource management that CXL facilitates. The CXL switch plays a significant role in configuring switch ports and memory mapping. The GUI demo shows memory modules divided into smaller memory blocks, where the user can select the size and properties of each block, including whether it is shared or private. The GUI makes it easy for users to view and manage memory blocks.

Compute Express Link (CXL) has a promising future as the mainstream choice for memory disaggregation systems. Backed by growing memory demand and the support of key industry players, CXL is poised to revolutionize memory management. Its compatibility with existing software is a significant advantage for hyperscalers. The efforts of the CXL Consortium, coupled with advances in memory pooling and sharing technologies, demonstrate the potential of CXL to transform computing architecture.