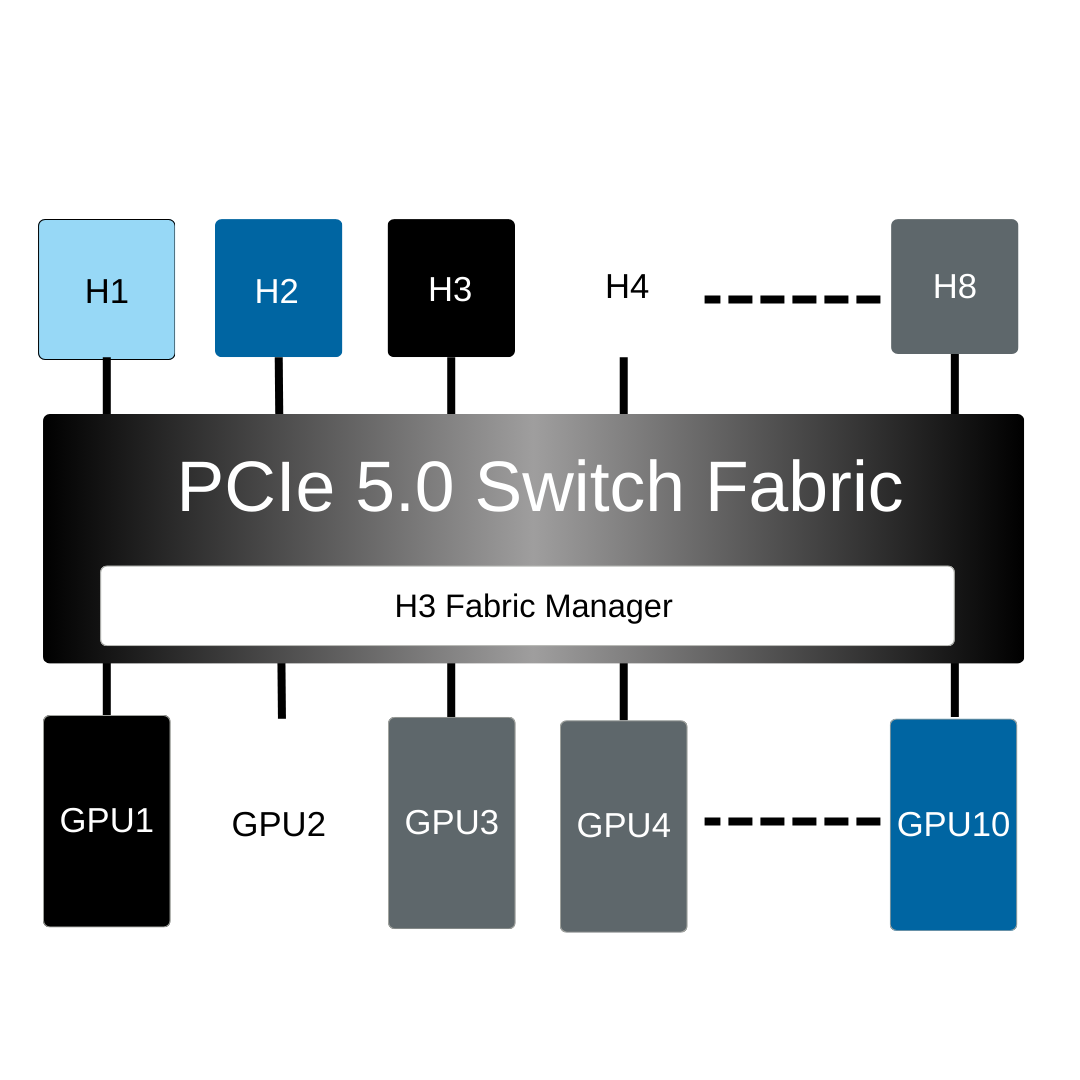

Overview

The Falcon 5012 supports two application scenarios (10 GPUs, or 8 GPUs + 4 Network cards), each configurable in Standard Mode or Advanced Mode. Advanced Mode requires a Premium License and lets each switch serve more hosts (up to 4, compared with 1 in Standard Mode), enabling more flexible deployments.

1. 10 GPUs

| Application scenarios | Standard Mode | Advanced Mode |
|---|---|---|
| 10 GPUs | a. 2 Hosts + 10 GPUs | b. 4 Hosts + 10 GPUs |
| | | c. 6 Hosts + 8 GPUs |

2. 8 GPUs (FHFL, 312 mm length) + 4 Network cards

| Application scenarios | Standard Mode | Advanced Mode |
|---|---|---|
| 8 GPUs + 4 Network cards | d. 2 Hosts + 8 GPUs + 4 Network cards | e. 4 Hosts + 8 GPUs + 4 Network cards |
| | | f. 6 Hosts + 8 GPUs + 2 Network cards |
| | | g. 8 Hosts + 8 GPUs |
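The two scenario tables above can be encoded as data, for example to check which layouts need the Premium License before planning a deployment. This is a minimal Python sketch under stated assumptions: the `Config` class, its field names, and the `CONFIGS` list are hypothetical and not part of any H3 Platform tool, and the only rule it encodes is the one from the overview (Advanced Mode requires a Premium License).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    """One Falcon 5012 layout from the scenario tables (hypothetical model)."""
    label: str  # letter used in this document (a-g)
    mode: str   # "Standard" or "Advanced"
    hosts: int  # x16 hosts, chassis-wide
    gpus: int   # FHFL GPUs, chassis-wide
    nics: int   # network cards, chassis-wide

    @property
    def needs_premium_license(self) -> bool:
        # Per the overview, only Advanced Mode requires a Premium License.
        return self.mode == "Advanced"


# Chassis-wide figures copied from the two scenario tables.
CONFIGS = [
    Config("a", "Standard", 2, 10, 0),
    Config("b", "Advanced", 4, 10, 0),
    Config("c", "Advanced", 6, 8, 0),
    Config("d", "Standard", 2, 8, 4),
    Config("e", "Advanced", 4, 8, 4),
    Config("f", "Advanced", 6, 8, 2),
    Config("g", "Advanced", 8, 8, 0),
]

if __name__ == "__main__":
    for cfg in CONFIGS:
        note = "Premium License" if cfg.needs_premium_license else "no license"
        print(f"{cfg.label}. {cfg.hosts} hosts + {cfg.gpus} GPUs + "
              f"{cfg.nics} NICs ({cfg.mode} Mode, {note})")
```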

1. 10 GPUs

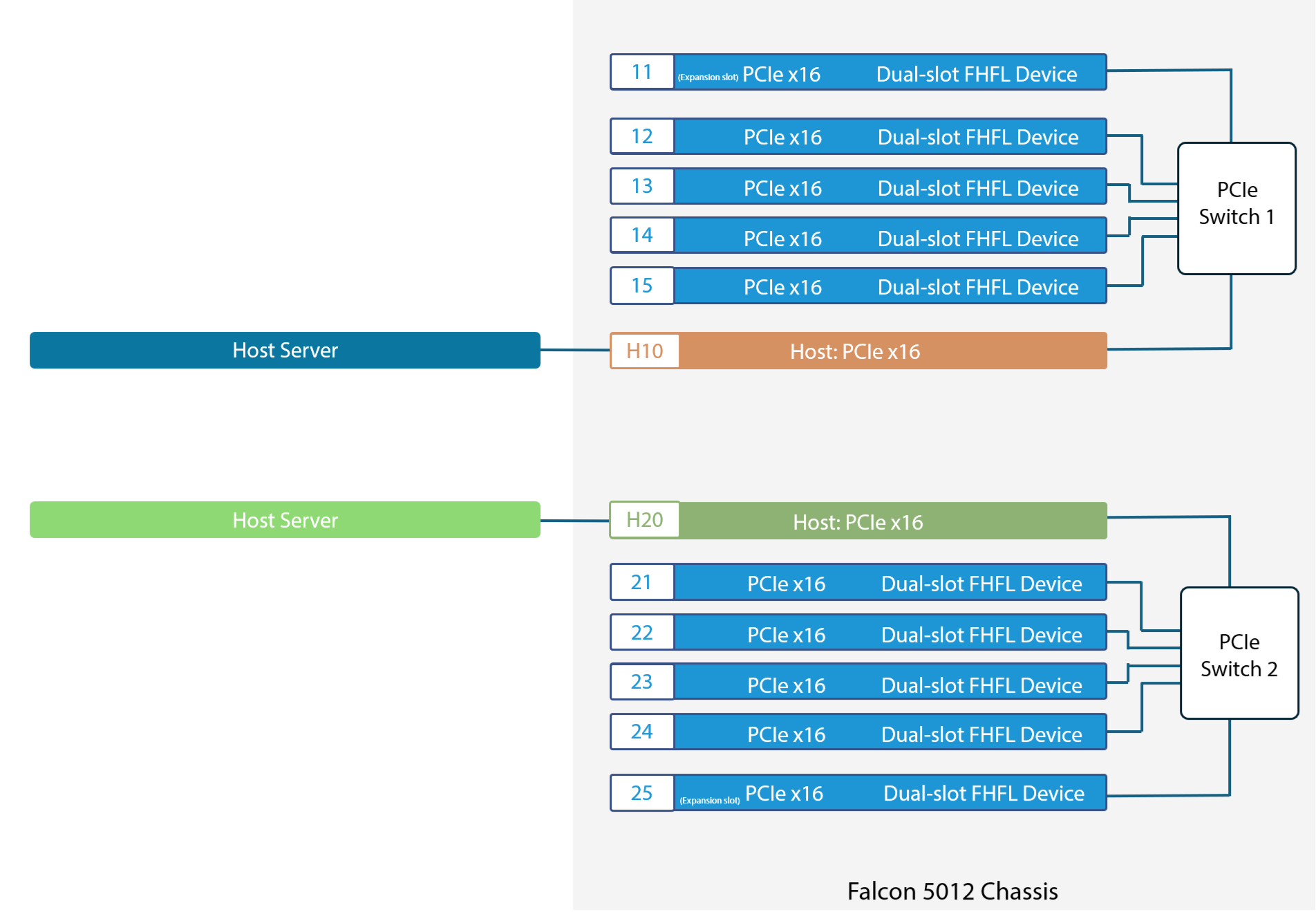

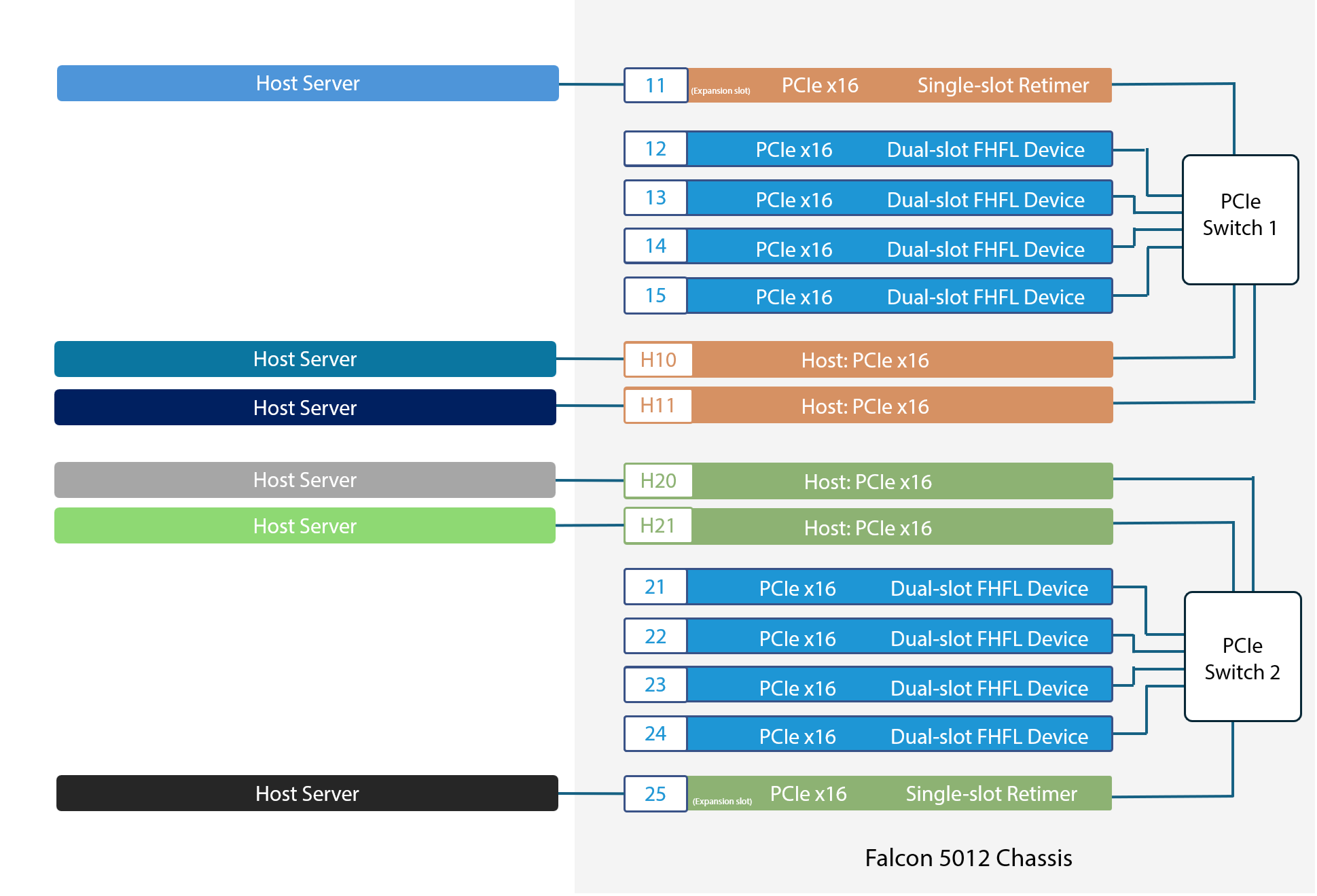

a. Standard Mode - 2 Hosts + 10 GPUs

| Configuration | Chassis-wide configuration | Per-switch configuration |
|---|---|---|
| Host port + Device port | 2 Hosts + 10 GPUs | 1 Host + 5 GPUs |

In the 10 GPUs scenario under Standard Mode, each host independently accesses the 5 GPUs connected to its own switch.

Figure 1. Block diagram of Standard Mode architecture topology with 2 x16 hosts and 10 GPUs
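The Standard Mode rule, where each host reaches only the GPUs behind its own switch, can be sketched as a simple host-to-device mapping. This Python sketch assumes 2 switches with 5 GPUs each, as in the per-switch table; the function name and the slot labels (`sw0-gpu0` and so on) are hypothetical and do not correspond to identifiers used by the product.

```python
from __future__ import annotations


def standard_mode_visibility(switches: int = 2,
                             gpus_per_switch: int = 5) -> dict[str, list[str]]:
    """Map each host to the GPUs it can access in Standard Mode.

    Hypothetical model: host N is attached to switch N and sees only
    the GPUs downstream of that switch, never those of another switch.
    """
    visibility: dict[str, list[str]] = {}
    for sw in range(switches):
        visibility[f"host{sw}"] = [f"sw{sw}-gpu{i}" for i in range(gpus_per_switch)]
    return visibility


if __name__ == "__main__":
    for host, gpus in standard_mode_visibility().items():
        print(host, "->", ", ".join(gpus))
```

Because the two lists never overlap, no GPU is shared between hosts in this model, which matches the "independently access" wording above.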

In the 10 GPUs application scenario under Advanced Mode, two configurations are available.

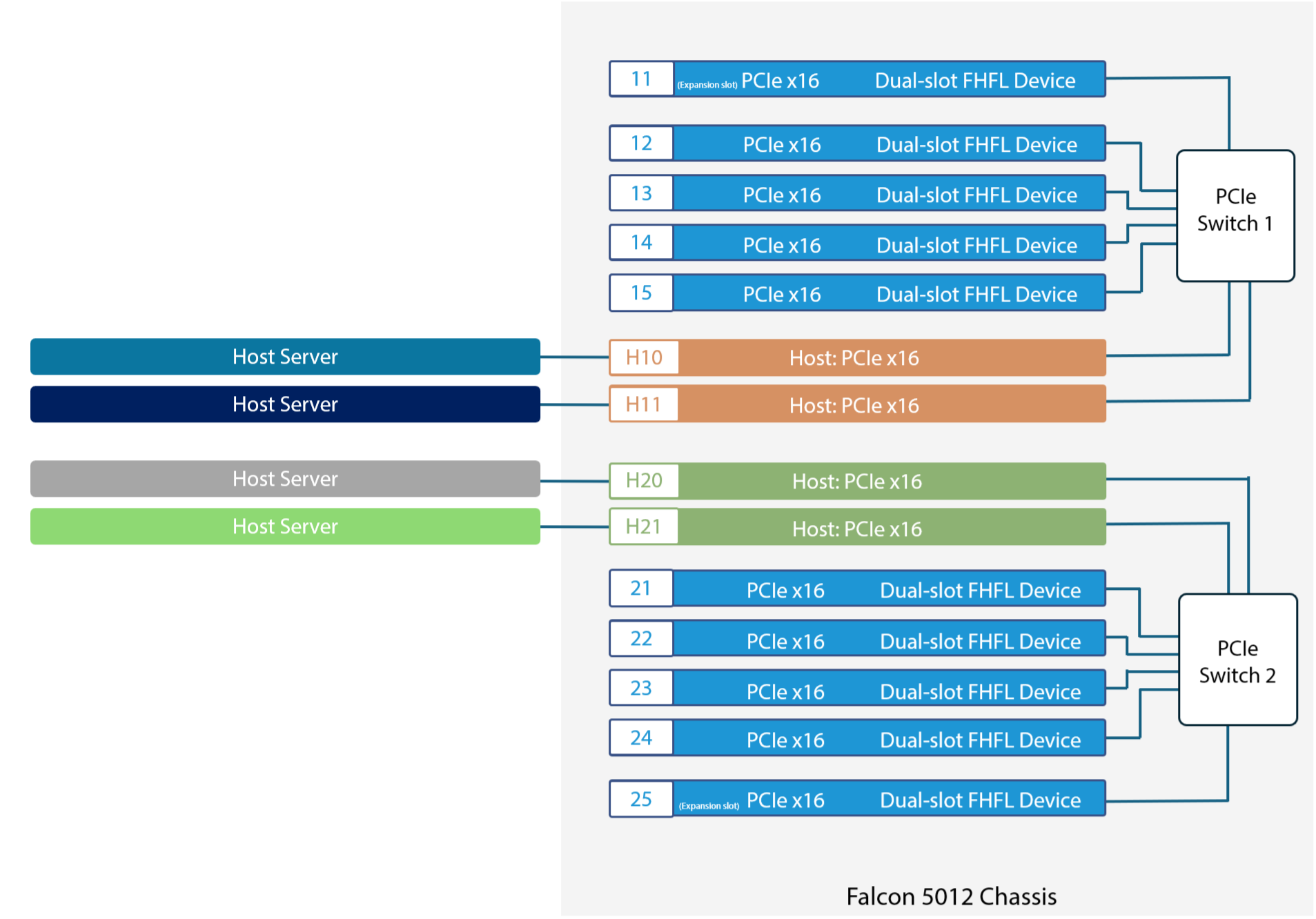

b. Advanced Mode - 4 Hosts + 10 GPUs

| Configuration | Chassis-wide configuration | Per-switch configuration |
|---|---|---|
| Host port + Device port | 4 Hosts + 10 GPUs | 2 Hosts + 5 GPUs |

In Advanced Mode, each switch supports 2 hosts and 5 FHFL devices. With 2 switches, the system can connect 4 hosts and 10 FHFL devices.

Figure 2. Block diagram of Advanced Mode architecture topology with 4 x16 hosts and 10 GPUs
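Each chassis-wide figure in these configuration tables is the per-switch figure multiplied by the 2 switches in the chassis. The short Python check below makes that arithmetic explicit for every layout in this document; the `(hosts, GPUs, NICs)` tuple layout, the `PER_SWITCH` dictionary, and the `chassis_totals` helper are illustrative names only.

```python
from __future__ import annotations

SWITCHES_PER_CHASSIS = 2  # the text describes 2 switches per chassis

# label: per-switch (hosts, GPUs, NICs), copied from each configuration table
PER_SWITCH = {
    "a": (1, 5, 0),
    "b": (2, 5, 0),
    "c": (3, 4, 0),
    "d": (1, 4, 2),
    "e": (2, 4, 2),
    "f": (3, 4, 1),
    "g": (4, 4, 0),
}


def chassis_totals(per_switch: tuple[int, ...],
                   switches: int = SWITCHES_PER_CHASSIS) -> tuple[int, ...]:
    """Scale a per-switch (hosts, GPUs, NICs) tuple to chassis-wide totals."""
    return tuple(count * switches for count in per_switch)


if __name__ == "__main__":
    for label, ps in PER_SWITCH.items():
        hosts, gpus, nics = chassis_totals(ps)
        print(f"{label}: {hosts} hosts + {gpus} GPUs + {nics} NICs")
```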

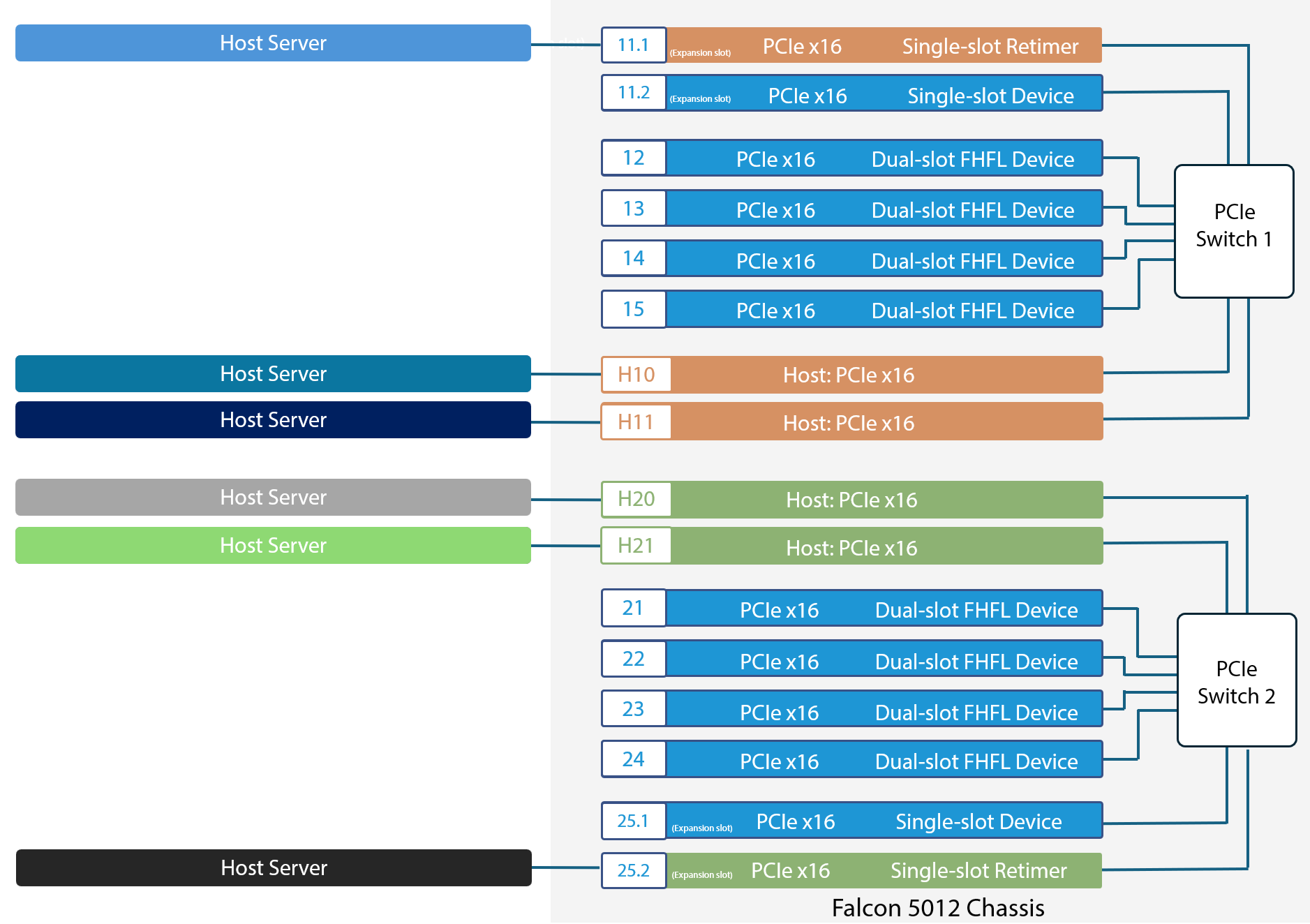

c. Advanced Mode - 6 Hosts + 8 GPUs

| Configuration | Chassis-wide configuration | Per-switch configuration |
|---|---|---|
| Host port + Device port | 6 Hosts + 8 GPUs | 3 Hosts + 4 GPUs |

External expansion slots can be connected to retimers to support additional hosts. In this configuration, each switch supports 3 hosts and 4 FHFL devices, allowing 2 switches to connect a total of 6 hosts and 8 FHFL devices.

Figure 3. Block diagram of Advanced Mode architecture topology with 6 x16 hosts and 8 GPUs

2. 8 GPUs + 4 Network cards

d. Standard Mode - 2 Hosts + 8 GPUs + 4 Network cards

| Configuration | Chassis-wide configuration | Per-switch configuration |
|---|---|---|
| Host port + Device port | 2 Hosts + 8 GPUs + 4 Network cards | 1 Host + 4 GPUs + 2 Network cards |

In the 8 GPUs + 4 Network cards scenario under Standard Mode, each host can independently access 4 GPUs and 2 Network cards on its connected switch.

Figure 4. Block diagram of Standard Mode architecture topology with 2 x16 hosts and 8 GPUs + 4 NICs

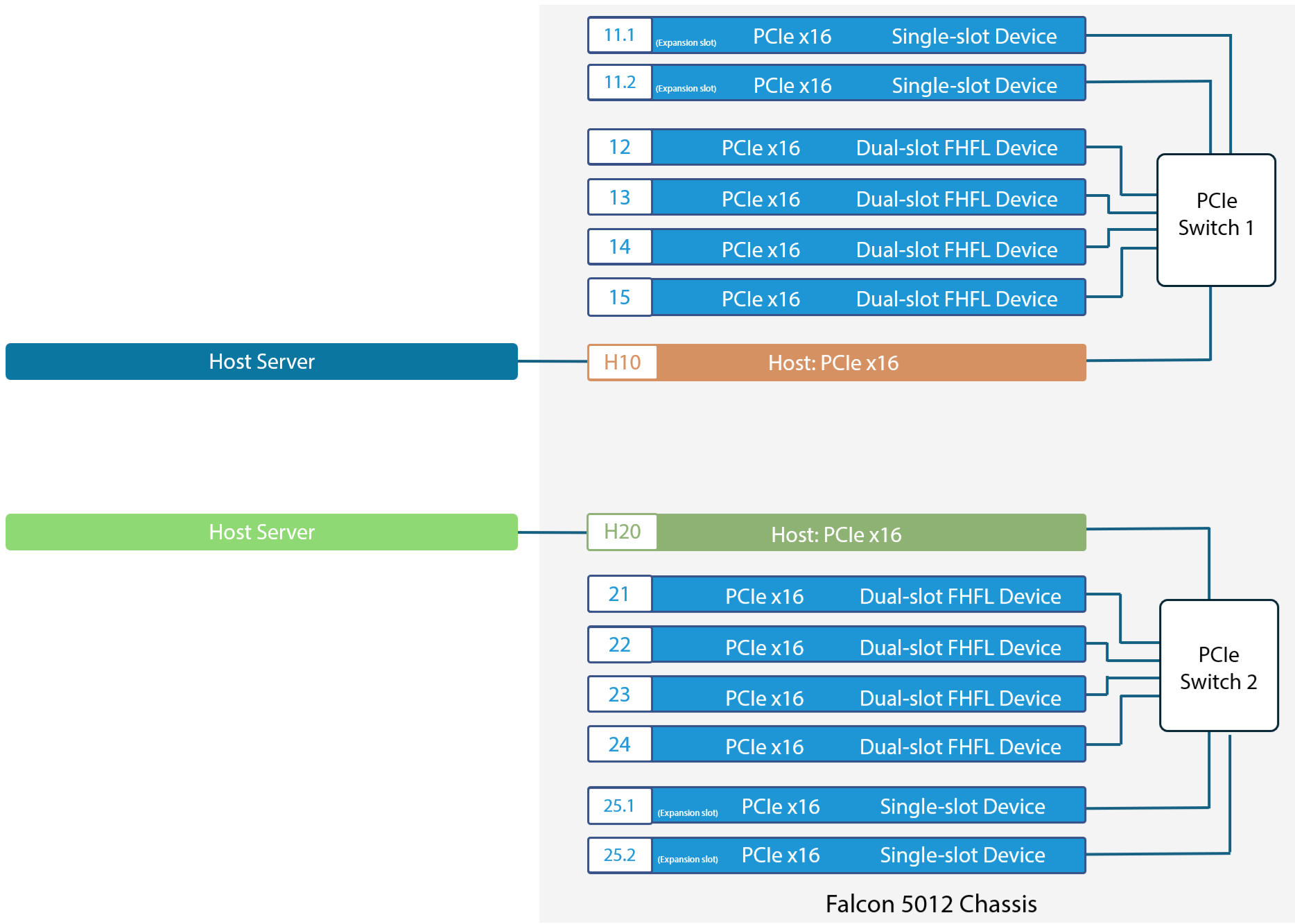

e. Advanced Mode - 4 Hosts + 8 GPUs + 4 Network cards

| Configuration | Chassis-wide configuration | Per-switch configuration |
|---|---|---|
| Host port + Device port | 4 Hosts + 8 GPUs + 4 Network cards | 2 Hosts + 4 GPUs + 2 Network cards |

In Advanced Mode, each switch supports at least 2 hosts, 4 FHFL devices, and 2 single-slot devices, so the system can connect 4 hosts, 8 GPUs, and 4 Network cards.

Figure 5. Block diagram of Advanced Mode architecture topology with 4 x16 hosts and 8 GPUs + 4 NICs
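When planning a deployment, the configuration tables can be read in reverse: given the number of hosts, GPUs, and Network cards required, pick a documented layout that covers them. The Python sketch below does this with a simple filter over the chassis-wide figures. It is an assumption-laden planning aid, not a vendor tool: `pick_config` and `LAYOUTS` are hypothetical names, only the seven layouts in this document are considered, and the preference order (Standard Mode first because it needs no license, then the fewest hosts, keeping table order on ties) is an illustrative choice.

```python
from typing import Optional

# (label, mode, hosts, GPUs, NICs): chassis-wide figures from this document
LAYOUTS = [
    ("a", "Standard", 2, 10, 0),
    ("b", "Advanced", 4, 10, 0),
    ("c", "Advanced", 6, 8, 0),
    ("d", "Standard", 2, 8, 4),
    ("e", "Advanced", 4, 8, 4),
    ("f", "Advanced", 6, 8, 2),
    ("g", "Advanced", 8, 8, 0),
]


def pick_config(hosts: int, gpus: int, nics: int = 0) -> Optional[str]:
    """Return the label of a documented layout covering the request, or None."""
    candidates = [
        layout for layout in LAYOUTS
        if layout[2] >= hosts and layout[3] >= gpus and layout[4] >= nics
    ]
    if not candidates:
        return None
    # Prefer Standard Mode (no Premium License), then the fewest hosts.
    # sort() is stable, so ties keep the table order.
    candidates.sort(key=lambda layout: (layout[1] != "Standard", layout[2]))
    return candidates[0][0]
```

For example, `pick_config(6, 8, 1)` returns `"f"`, while a request for 8 hosts and 10 GPUs returns `None` because no documented layout covers it.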

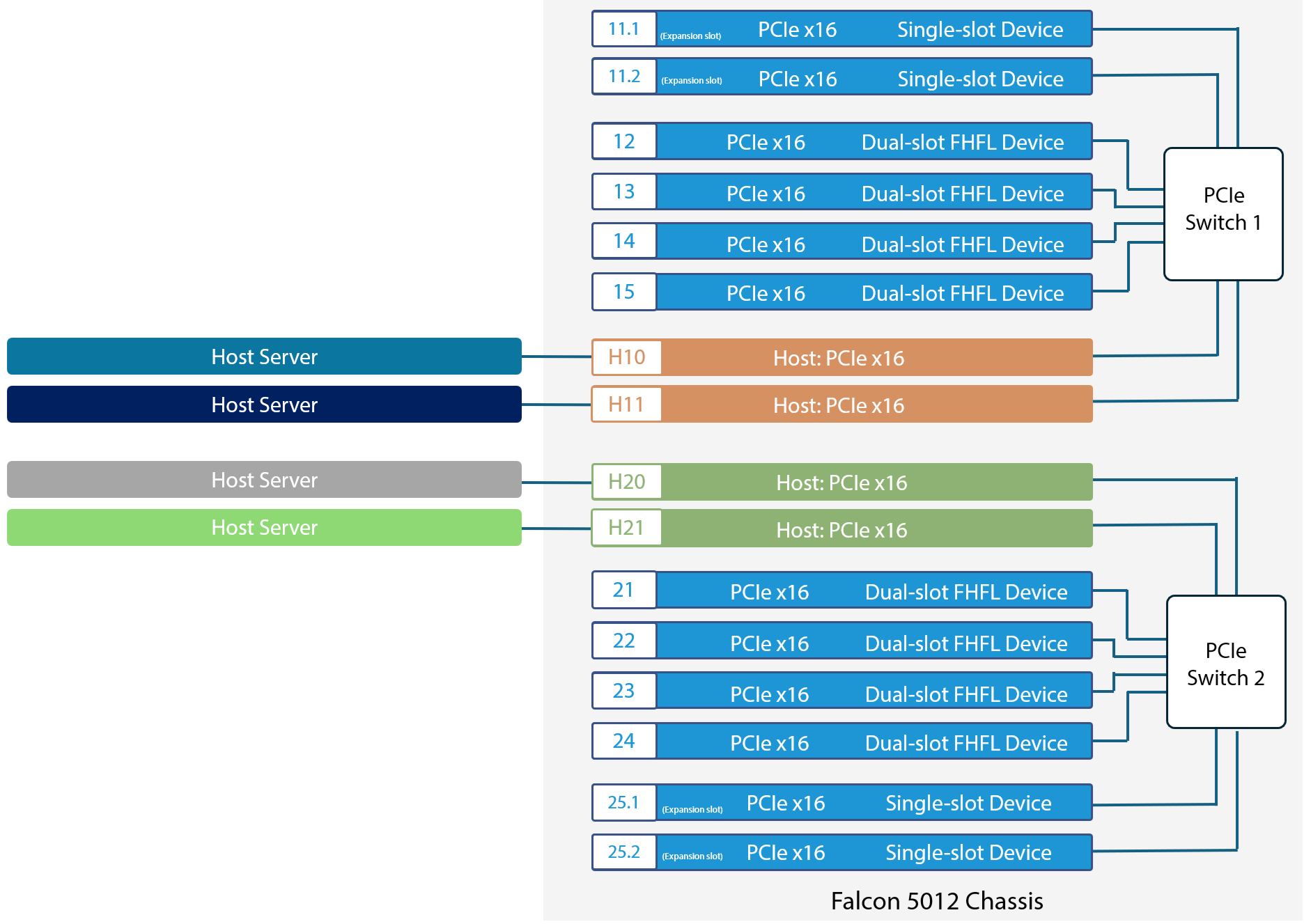

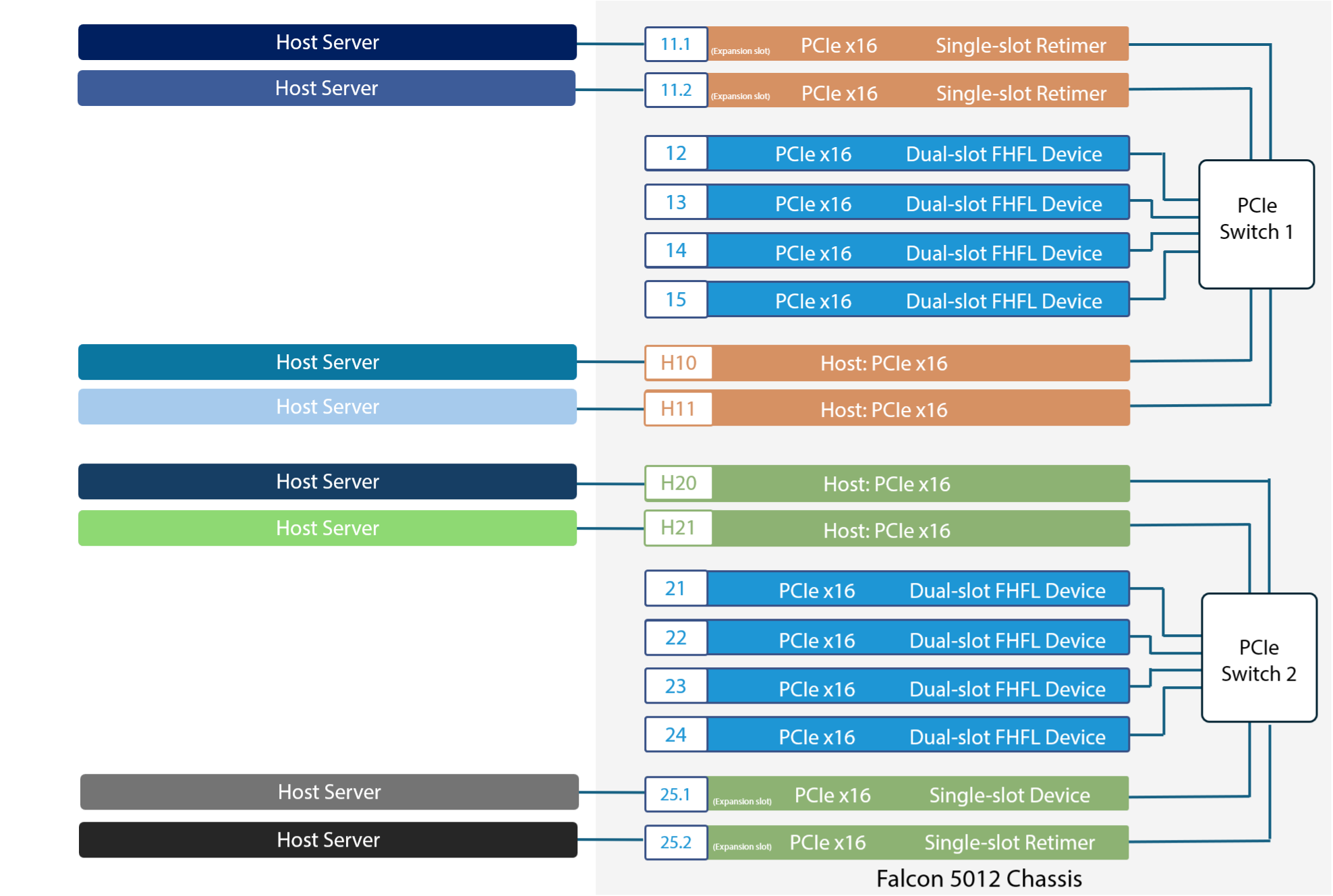

f. Advanced Mode - 6 Hosts + 8 GPUs + 2 Network cards

| Configuration | Chassis-wide configuration | Per-switch configuration |
|---|---|---|
| Host port + Device port | 6 Hosts + 8 GPUs + 2 Network cards | 3 Hosts + 4 GPUs + 1 Network card |

Users can designate one of the external expansion slots to connect additional hosts. In this configuration, each switch supports 3 hosts, 4 FHFL devices, and 1 NIC, so the chassis supports a total of 6 hosts, 8 FHFL devices, and 2 single-slot devices.

Figure 6. Block diagram of Advanced Mode architecture topology with 6 x16 hosts and 8 GPUs + 2 NICs

g. Advanced Mode - 8 Hosts + 8 GPUs

| Configuration | Chassis-wide configuration | Per-switch configuration |
|---|---|---|
| Host port + Device port | 8 Hosts + 8 GPUs | 4 Hosts + 4 GPUs |

Users can designate external expansion slots to connect additional hosts. In this configuration, each switch supports 4 hosts and 4 FHFL devices, so the chassis supports a total of 8 hosts and 8 FHFL devices.

Figure 7. Block diagram of Advanced Mode architecture topology with 8 x16 hosts and 8 GPUs

For more information or to purchase a Premium License, please contact sales@h3platform.com.